Serverless has quickly become the hot new buzzword in the software development community, especially when it comes to the cloud and cost optimization. With the ability to deploy your code straight onto a cloud provider’s platform, paired with a consumption-based billing model, serverless and FaaS can be a very enticing way to move your code to the cloud. While there is certainly a benefit to developing your solution in this manner, it doesn’t always come with a cost benefit over an alternative such as running microservices on Kubernetes. If traffic arrives in unpredictable bursts, elastic scaling and pay-per-use pricing make the most sense. On the other hand, if you have a consistent and predictable flow of traffic, cloud provider functions might not be the most cost-effective solution, because you pay for every invocation rather than for a fixed amount of capacity. So, if function apps aren’t the right solution for your needs, what might you use instead? Why, serverless Kubernetes, of course!

What is serverless Kubernetes?

How does serverless Kubernetes work? Really, it runs the same way as any standard serverless solution. Serverless applications, despite the name, still run on a server; they just abstract the server configuration and infrastructure away from the application, allowing developers to focus on their code rather than the infrastructure. I’m not going to cover how to install any of the various serverless frameworks for Kubernetes in this blog. There are plenty of resources out there that can walk you through installation and configuration.

There are a multitude of frameworks you can choose for your serverless Kubernetes solution: OpenFaaS, Kubeless (which is no longer maintained), Knative, Fission, and many more. While there are plenty to choose from, most of them share the same foundational properties. Kubernetes itself is platform agnostic, meaning that you can write your application once and run it anywhere: locally, or on Azure, AWS, or GCP. The same is true of the serverless Kubernetes frameworks. These frameworks also support writing your solution in practically any language, so if you had three different functions you could write one in Scala, one in C#, and the third in Go. Kubernetes doesn’t care what you use to write your application. Variety is the spice of life!

How do you create a serverless Kubernetes function?

As I mentioned earlier, there are plenty of frameworks for implementing event-driven or FaaS architectures within Kubernetes. With Kubeless no longer maintained, we will look at an example using Knative. Knative is an open source project, originally created by Google, for building Kubernetes-native applications. One of the great benefits of Knative is that it runs wherever Kubernetes runs. A solution you develop locally with Knative runs on the same kind of Kubernetes setup as your test and production environments, so developers can trust that the serverless code they’re writing will behave the same locally as it does when deployed. Knative also lets you bring your own logging (using solutions such as Fluent Bit), monitoring, networking, and service mesh. It supports a variety of application frameworks as well, from Ruby on Rails to Spring to Django, plus many more!

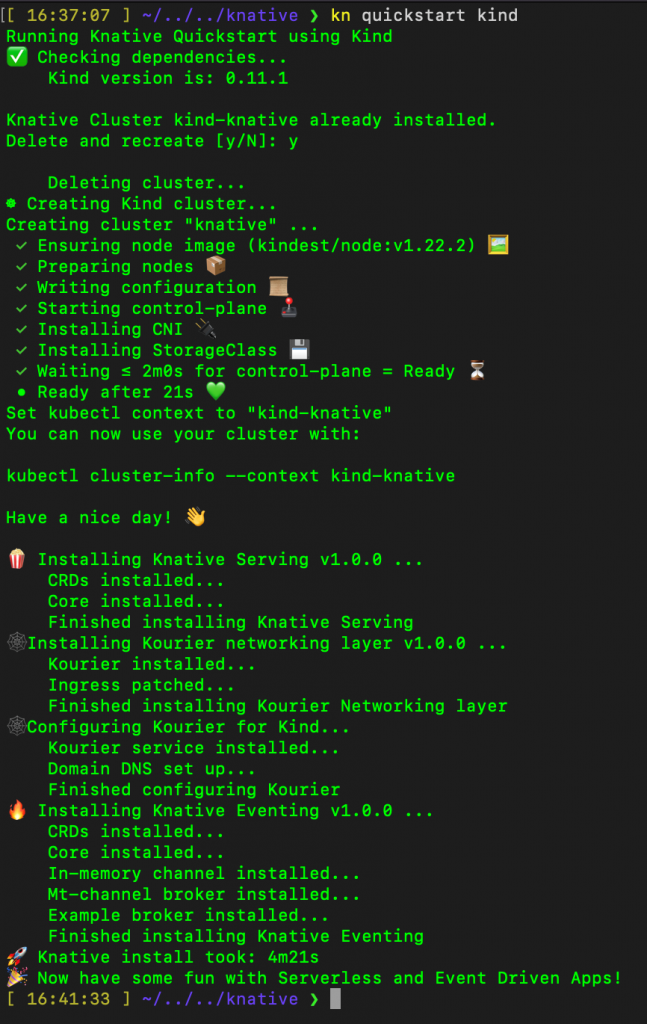

To demonstrate serverless Kubernetes, I will show a simple example of setting up Knative with kind to develop a serverless application locally. Knative now has a handy plugin called quickstart, which sets up your local environment with a single command. After installing the plugin, you can create a new cluster with this command:

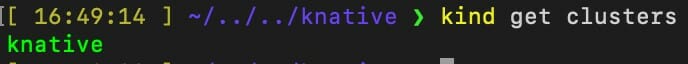

Then you can use kind to verify that the cluster has been created:

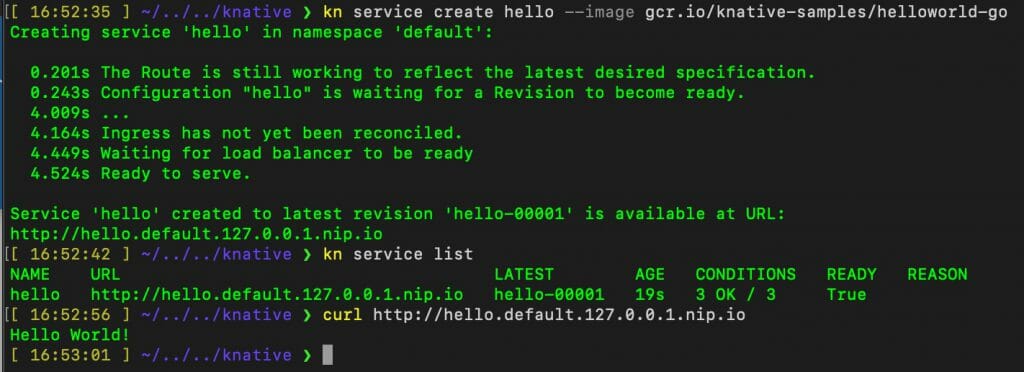
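In text form, the two steps above might look like the following sketch. It assumes that kind, kubectl, and the kn CLI with its quickstart plugin are already installed and on your PATH. The `CLUSTER_NAME` variable is introduced here purely for illustration; `knative` is the name the quickstart plugin gives the cluster by default, so adjust it if your version behaves differently.

```shell
# Illustrative variable: the default cluster name used by the quickstart plugin.
CLUSTER_NAME="knative"

# Create a local kind cluster with Knative installed on it.
kn quickstart kind

# List the kind clusters and check that ours is among them.
# grep -x only matches when the whole line equals the cluster name.
kind get clusters | grep -x "$CLUSTER_NAME"
```

If the last command prints the cluster name, the local environment is ready for the next step.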

Now we can create a simple hello-world service and test it out:

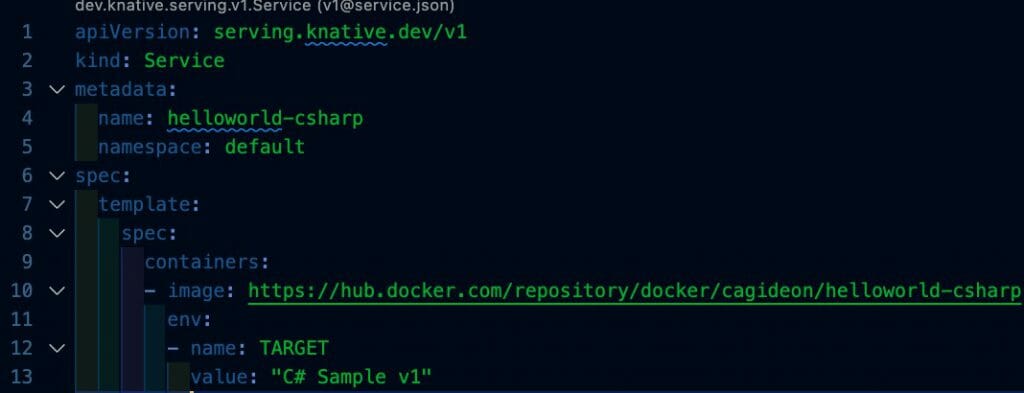
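One way to do this from the command line is with the kn CLI, sketched below. The service name `hello` and the shell variables are assumptions made for illustration, and the image is the Go hello-world sample published by the Knative project; any container that listens on port 8080 would work the same way.

```shell
# Illustrative names: pick whatever service name and image suit you.
SERVICE_NAME="hello"
IMAGE="ghcr.io/knative/helloworld-go:latest"

# Create a Knative service from an existing container image.
kn service create "$SERVICE_NAME" \
  --image "$IMAGE" \
  --port 8080 \
  --env TARGET=World

# Ask Knative for the URL it assigned to the service, then call it.
SERVICE_URL="$(kn service describe "$SERVICE_NAME" -o url)"
curl "$SERVICE_URL"
```

Using kn here keeps the example to a single command; the same service could equally be declared in a YAML file and applied with kubectl.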

This is a very simple example of using an existing image to create a Knative pod and service! You can also create your own Knative application by pointing to a Docker image in your own Docker Hub repository. To pull this custom image into your Knative project, change the image value in your service.yaml to point to whatever image you want to pull. It’s that simple!

In the service.yaml for this example, we pull the image from my Docker Hub repository and set the TARGET environment variable to “C# Sample v1”.
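A service.yaml along those lines might look like the sketch below, written out with a shell heredoc so it can be pasted straight into a terminal. The service name `helloworld-csharp` and the `<your-dockerhub-user>` placeholder are assumptions for illustration, not the exact values used in this post; only the TARGET value comes from the text above.

```shell
# Write a minimal Knative Service manifest to service.yaml.
# The quoted 'EOF' stops the shell from expanding anything inside the file.
cat > service.yaml <<'EOF'
apiVersion: serving.knative.dev/v1
kind: Service
metadata:
  name: helloworld-csharp   # hypothetical service name
  namespace: default
spec:
  template:
    spec:
      containers:
        # Placeholder image: replace <your-dockerhub-user> with your own Docker Hub account.
        - image: docker.io/<your-dockerhub-user>/helloworld-csharp
          env:
            - name: TARGET
              value: "C# Sample v1"
EOF
```

The value is quoted because an unquoted `#` preceded by a space would start a YAML comment and cut the string short.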

We then use kubectl to apply service.yaml, which creates our service:

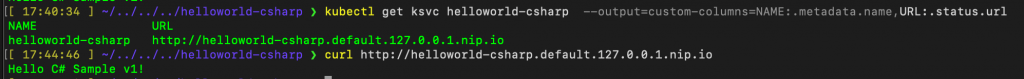

After our local service is set up, we are ready to get the URL and test.
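As a rough sketch, applying the file and testing the result could look like this. It assumes service.yaml is in the current directory and that the service it declares is named `helloworld-csharp`, which is a hypothetical name; use whatever `metadata.name` your own file sets.

```shell
# Hypothetical service name: must match metadata.name in service.yaml.
SERVICE_NAME="helloworld-csharp"

# Create (or update) the Knative service from the manifest.
kubectl apply -f service.yaml

# Block until Knative reports the service as Ready, or give up after two minutes.
kubectl wait ksvc "$SERVICE_NAME" --for=condition=Ready --timeout=120s

# Read the URL from the service status and send a request to it.
SERVICE_URL="$(kubectl get ksvc "$SERVICE_NAME" -o jsonpath='{.status.url}')"
curl "$SERVICE_URL"
```

Waiting for the Ready condition first avoids calling the URL before the image has been pulled and the first revision is up.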

And there you have it! A custom Docker image, loaded into a Knative instance running locally. It’s as simple as that!

In conclusion

Serverless Kubernetes provides an interesting solution to the modern trend of serverless application development. Not every problem requires the use of a serverless platform such as AWS Lambda or Azure Functions. Being able to write your serverless application to run on Kubernetes provides a necessary second option for modern development needs. If your solution doesn’t require the overhead of a platform such as AWS Lambda or Azure Functions, give serverless Kubernetes a try!
