In Part I of this blog series, we explored language models and transformers. Now let’s dive into some examples of GPT-3.

What is GPT-3

GPT-3 is the successor of GPT-2 and is built on the same transformer architecture. It’s trained on hundreds of gigabytes of text (GPT-2 used about 40GB) and boasts 175 billion (that’s right, billion!) parameters, the values that a neural network tries to optimize during training for the task at hand. That’s a huge upgrade over GPT-2, which already used a whopping 1.5 billion parameters: GPT-3 has more than a hundred times as many. GPT-3 shows that the performance of language models depends strongly on model size, dataset size and the amount of compute. Like GPT-2, it’s trained on the next-word prediction task.
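To make the next-word prediction task concrete, here is a minimal sketch in Python. It is not how GPT-3 works internally: GPT-3 uses a transformer with billions of learned parameters, while this toy simply counts which word follows which. The function names and the tiny corpus are made up for illustration; only the objective (predict the next word from what came before) is the same.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    followers = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        followers[current_word][next_word] += 1
    return followers


def predict_next_word(model, word):
    """Return the most frequent follower of `word`, or None if the word was never seen."""
    candidates = model.get(word.lower())
    if not candidates:
        return None
    return candidates.most_common(1)[0][0]


# Hypothetical toy corpus, invented for this example.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)

print(predict_next_word(model, "the"))  # "cat" follows "the" most often here
```

A model like GPT-3 replaces the counting table with a neural network that looks at the whole preceding text, not just the last word, but the training signal is still "which word comes next".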

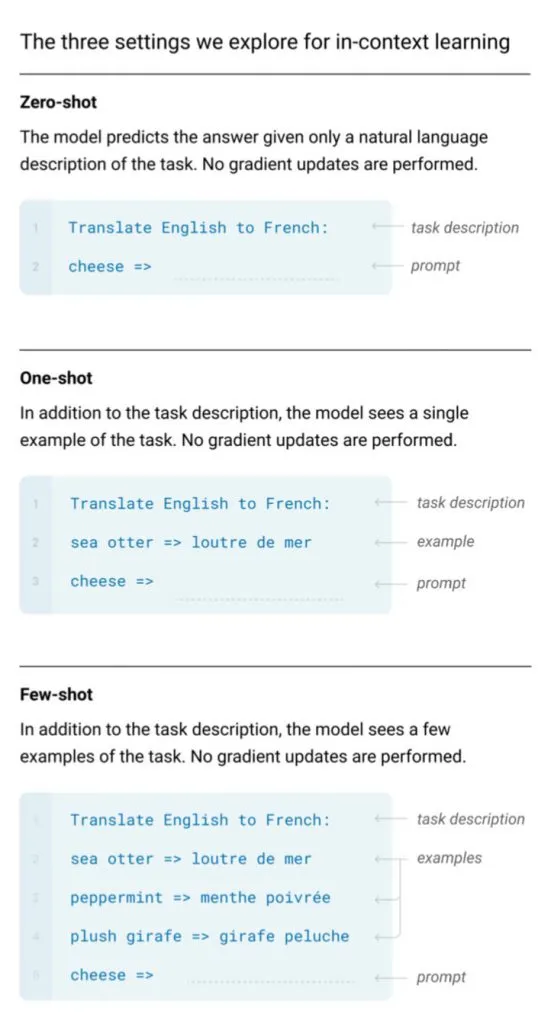

What sets GPT-3 apart from the rest is that it’s task agnostic. That means it can perform a task without a task-specific final layer or fine-tuning. It works straight out of the box and is able to perform tasks from just a handful of examples (called shots) given in the input. How this works is shown below.
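The idea of shots can be sketched as plain prompt construction: the task description and a few worked examples are written into the input text, and the model is asked to continue it. The sketch below only builds that text and does not call any API. The `build_prompt` helper and the `Input:`/`Output:` labels are assumptions made for this illustration, not a format GPT-3 requires.

```python
def build_prompt(task_description, examples, query):
    """Assemble a plain-text prompt: task description, worked examples (shots), then the new input."""
    lines = [task_description, ""]
    for source, target in examples:
        lines.append(f"Input: {source}")
        lines.append(f"Output: {target}")
        lines.append("")
    # The prompt ends with an open "Output:" for the model to complete.
    lines.append(f"Input: {query}")
    lines.append("Output:")
    return "\n".join(lines)


# Hypothetical translation examples, invented for this illustration.
shots = [("cheese", "fromage"), ("house", "maison")]

zero_shot = build_prompt("Translate English to French.", [], "cat")
few_shot = build_prompt("Translate English to French.", shots, "cat")

print(few_shot)
```

With an empty list of examples this is a zero-shot prompt, with one example it is one-shot, and with several it is few-shot. In all three cases the model’s weights stay untouched; only the input text changes.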

So, what can GPT-3 do? There’s a playground demo for which you can register (https://beta.openai.com). In summary, you can use it for chat and conversation, question answering, text summarization, code writing, semantic search and much more. Another strength of GPT-3 is its ability to handle non-English languages, especially for text generation.

GPT-3 Examples

People found that GPT-3 can generate not just prose but almost any kind of text, including guitar tabs or computer code. Others have shown that GPT-3 is the most coherent language model to date. Let’s check out some examples:

We can use GPT-3 to create HTML layouts, as shown by Sharif Shameem:

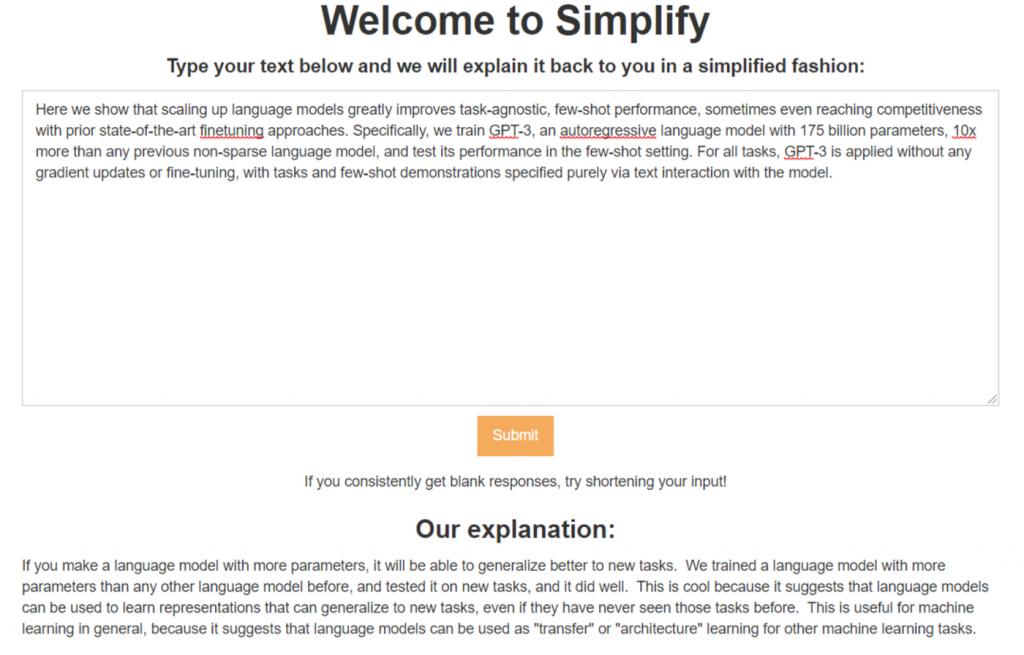

Summarisation has been built around the API by Chris Lu:

GPT-3 also does well when given a Turing test built around common-sense questions for A.I. It’s pretty capable of answering those questions, as shown below:

It can parse unstructured data and organise it neatly for us:

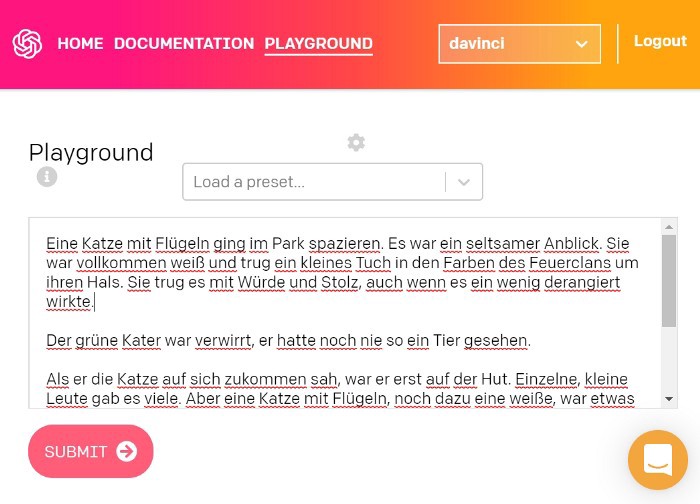

And finally, let’s show its power in language generation. We’ll start with German. Vlad Alex asked it to write a fairy tale that starts with “A cat with wings took a walk in a park”:

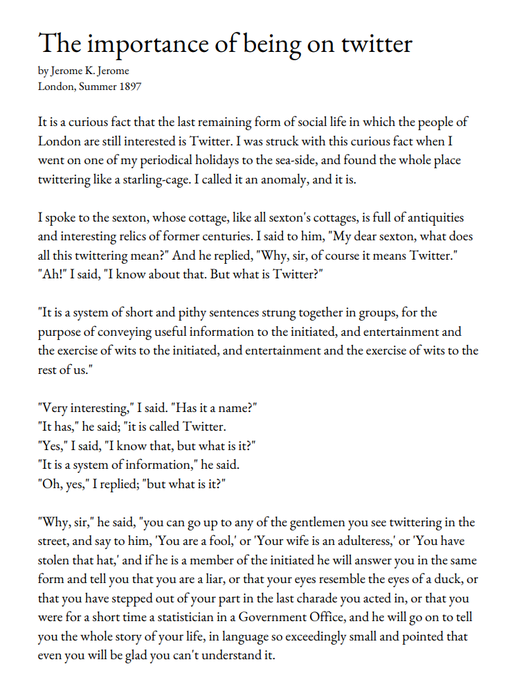

A final example, in English, shows GPT-3 generating text on the topic of “Twitter”, written in the style of 19th-century writer Jerome K. Jerome.

These examples show that GPT-3 not only generates text in multiple languages but can also imitate a particular writing style. If you’re interested, check out these other examples:

- Search engine

- The biggest thing since Bitcoin

- Building websites from English descriptions

- A natural language command line shell

- Taking a Turing Test

- Medical diagnosis

- Resume writing

- Presentation writing

- Excel spreadsheet function

- On the meaning of life

Summary

We’re still at the beginning, and a wonderful beginning it is, but we’re already seeing great experiments with GPT-3 that display its power, impact and above all potential. GPT-3 shows the immense power of language models and large networks, although that power comes at a cost. It’s capable of rephrasing difficult text, structuring text, answering questions and creating coherent text in multiple languages, all while working straight out of the box. Of course, there are downsides and improvements to be made. And there’s still a use for BERT, ERNIE and similar models, which we’ll discuss in later blogs.

NLP has lagged behind other fields such as image recognition, where huge training sets and accurate pretrained models have long been publicly available. NLP is now on the verge of the moment when smaller businesses and data scientists can leverage the power of language models without needing to train them on large, expensive machines. Natural language processing models will revolutionize the way we interact with the world in the coming years. All in all, GPT-3 is a huge leap forward in the battle of language models.
