In a world where Artificial Intelligence (A.I.) is the mantra of the 21st century, Natural Language Processing (NLP) hasn’t quite kept up with other A.I. fields such as image recognition. However, recent advances within the applied NLP field, known as language models, have put NLP on steroids.

We’re here because machine learning has become a core technology underlying many modern applications, and this is especially true of natural language processing. Since word embeddings and their ground-breaking paper (Word2Vec, 2013, Mikolov et al.) we’ve arrived at a transformative stage: meet transformers.

No, we’re not talking about the latest Michael Bay film starring Transformers, but our transformers had a similarly explosive impact on the NLP industry. With language models like BERT and ERNIE we’ve seen a recent trend of ever larger language models. But is bigger always better? The GPT-3 model by OpenAI has taken it up a notch: it is a monstrosity in terms of both size and performance, and it shows that when it comes to language models, size really does matter. This blog will provide a gateway into language models, their algorithms, and GPT-3.

“Extrapolating the spectacular performance of GPT-3 into the future suggests that the answer to life, the universe and everything is just 4.398 trillion parameters.”

– Geoff Hinton

Language models and transformers

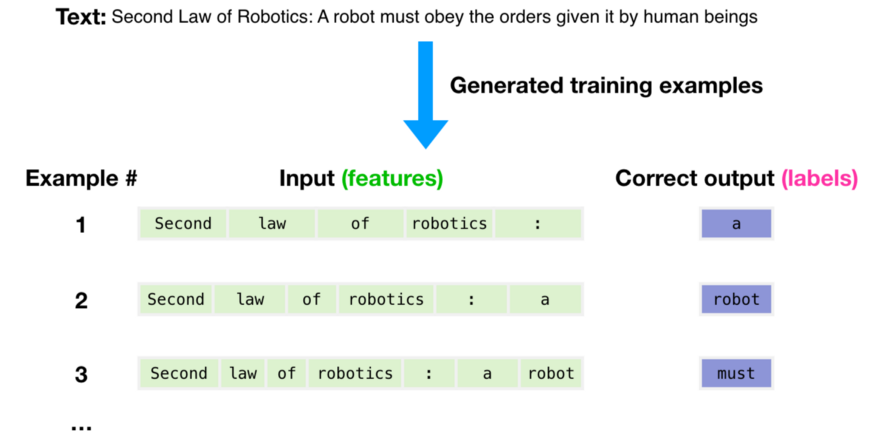

Before we can dive into the greatness of GPT-3 we need to talk about language models and transformers. Language models are context-sensitive deep learning models that learn the probabilities of sequences of words, be it spoken or written, in a common language such as English. With these probabilities, a model then predicts the next word in a sequence, known simply as “next word prediction.” Here’s how that’s done:
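To make next word prediction concrete, here is a minimal sketch in Python. It is a toy stand-in rather than a deep learning model: it simply counts which word follows which in a tiny made-up corpus and turns those counts into probabilities. The corpus and the function names (`train_bigram_model`, `next_word_probabilities`, `predict_next_word`) are assumptions made up for this illustration.

```python
from collections import Counter, defaultdict


def train_bigram_model(text):
    """Count, for every word, which words follow it in the training text."""
    words = text.lower().split()
    follow_counts = defaultdict(Counter)
    for current_word, next_word in zip(words, words[1:]):
        follow_counts[current_word][next_word] += 1
    return follow_counts


def next_word_probabilities(model, word):
    """Turn the raw follow-up counts for `word` into probabilities."""
    counts = model.get(word.lower())
    if not counts:
        return {}  # the word was never seen with a successor
    total = sum(counts.values())
    return {candidate: count / total for candidate, count in counts.items()}


def predict_next_word(model, word):
    """Return the most probable next word, or None for an unseen word."""
    probabilities = next_word_probabilities(model, word)
    if not probabilities:
        return None
    return max(probabilities, key=probabilities.get)


# Hypothetical toy corpus, purely for illustration.
corpus = "the cat sat on the mat . the cat ate the fish ."
model = train_bigram_model(corpus)

print(next_word_probabilities(model, "the"))
print(predict_next_word(model, "the"))
```

A real language model replaces the counting table with a neural network and looks at far more context than a single previous word, but the output has the same shape: a probability for every candidate next word.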

The “how” of the language models rests on the shoulders of the algorithms of choice. Before transformers, many models used Recurrent Neural Networks (RNN) or (bi-directional) Long Short-Term Memory (LSTM). If you want to read up on those check out the following links:

Illustrated Guide to Recurrent Neural Networks

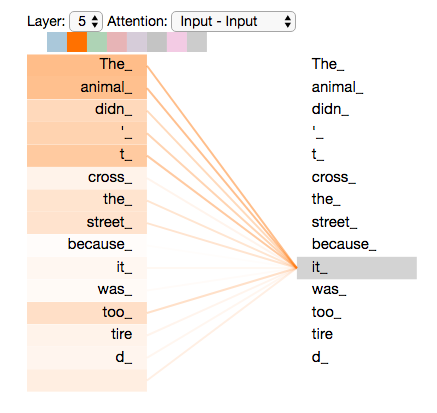

Transformers rely on a mechanism called attention. The transformer architecture was introduced in the paper “Attention Is All You Need” from 2017 (Vaswani et al.), which revolutionised the algorithms behind language models.

The mechanism of attention is best explained by looking at the biological side, or how we humans solve this. I always like the comparison with the human mind, since it makes the entire process feel slightly more natural. As our eyes move over a text, or even an image, we focus on, i.e. attend to, certain parts of interest. In text we use this to establish dependencies between words and sentences and to keep track of what came before. While reading, we use our “understanding” of language to focus on the important bits and pieces, which helps us interpret the text, follow a coherent story and answer questions even after we have finished reading.

Transformers with attention adopt a similar technique; they’re able to focus on the most informative and relevant (at that point in time) parts of a sequence of text for further calculations.
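As a rough illustration of this idea, the sketch below implements scaled dot-product attention, the formula from “Attention Is All You Need”: softmax(QKᵀ / √d_k) V. It is written in plain Python for readability. The two-dimensional token vectors are invented for the example, and using the same vectors as queries, keys and values (with no learned projections) is a simplifying assumption; a real transformer learns separate projections for each.

```python
import math


def softmax(scores):
    """Convert raw scores into positive weights that sum to 1."""
    highest = max(scores)  # subtract the max for numerical stability
    exps = [math.exp(score - highest) for score in scores]
    total = sum(exps)
    return [value / total for value in exps]


def dot(a, b):
    """Dot product of two equally sized vectors."""
    return sum(x * y for x, y in zip(a, b))


def scaled_dot_product_attention(queries, keys, values):
    """Compute softmax(Q K^T / sqrt(d_k)) V, one query at a time."""
    d_k = len(keys[0])
    outputs, all_weights = [], []
    for query in queries:
        # How relevant is each position to the current query?
        scores = [dot(query, key) / math.sqrt(d_k) for key in keys]
        weights = softmax(scores)
        # Weighted average of the value vectors.
        output = [
            sum(weight * value[i] for weight, value in zip(weights, values))
            for i in range(len(values[0]))
        ]
        outputs.append(output)
        all_weights.append(weights)
    return outputs, all_weights


# Hypothetical 2-dimensional "embeddings", invented for this example.
tokens = ["the", "cat", "sat"]
embeddings = [[1.0, 0.0], [0.0, 1.0], [1.0, 1.0]]

# Self-attention: every token attends to every token in the sequence.
outputs, weights = scaled_dot_product_attention(embeddings, embeddings, embeddings)
for token, row in zip(tokens, weights):
    print(token, [round(weight, 2) for weight in row])
```

Each printed row shows how much one token “focuses” on every token in the sequence. The weights in a row always sum to 1, so the output for a token is a blend of the whole sequence, dominated by the parts that score as most relevant.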

If you’re more of a video learner check out this great video on Transformers:

Language models are used for a wide range of tasks:

- Translation

- Named Entity Recognition (NER) — great for anonymizing text

- Question Answering Systems

- Sentiment Analysis

- Text Classification

- Language Generation

Now that you’re up to speed on transformers, we can dive into the exciting world of GPT-3 in Part 2 of the blog!
