Introduction:

A SharePoint list is a simple collection of data that you can easily create and share with your team members. Lists are found in both on-premises and cloud versions of SharePoint. There are many ready-to-use list templates like tasks, surveys, contacts, and issue tracking. SharePoint List is useful for both business users and technical users. In one of our current projects, we use it along with Power APPs and Power Automate for Tabular model dynamic row level security setup (this will be another topic for a future blog J).

Before SharePoint Online List connector for ADF was available, we used to use SQL Server Integration Service (SSIS) to copy SharePoint List using OData Source to connect to SharePoint List.

In this blog, I will show you how to copy SharePoint Lists using Azure Data Factory. And to make copying multiple lists more dynamic, we created control tables along with parameters. Then we assigned parameters with values of OData query statements for copying SharePoint Lists.

Prerequisites:

- App registration

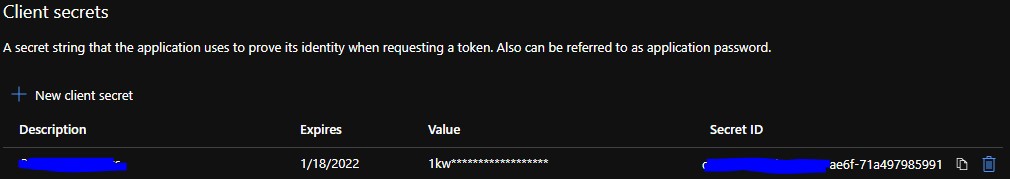

In order to connect to SharePoint, the SharePoint List Online connector uses service principal as the authentication method. So, we need first to create an app registration in Azure Active Directory and copy Application ID, Tenant ID. Secondly, create a new client secret as show below:

2. Grant SharePoint Online site permission to your registered application

For this step, Microsoft provides a good document. One thing I want to mention is that please make sure this step is performed by a person who has the SharePoint Online site owner permission. The developers usually don’t have that permission.

You can check it (little different from MSFT doc) by going to the site home page -> click “Settings” in the right corner -> then click “permissions” -> check “Site owners – full control” to see who is the “Owner”.

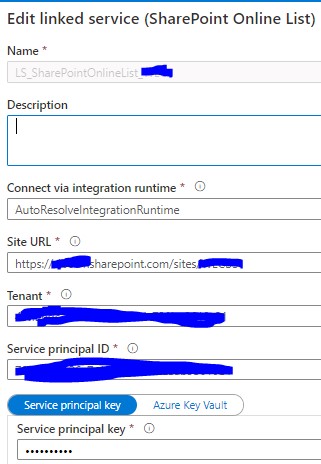

3. Create Linked Service

We use SharePoint Online List connector to create a Linked Service for SharePoint lists online, the configuration should like this.

Pipeline and dynamic loading configuration

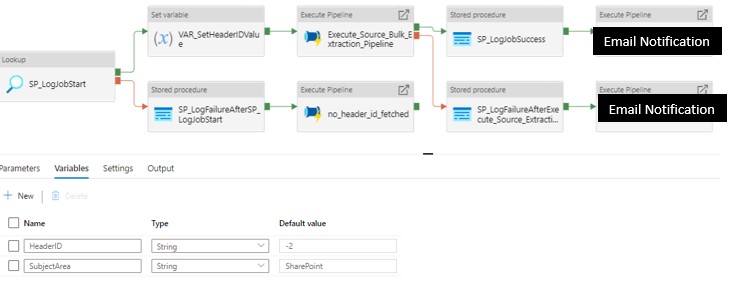

- Overall high-level pipeline setting

In the main pipeline, we include logging steps to write all Azure pipeline activity into a database table, so we can refer it when we want to do error debug and diagnosis. Another useful feature we added is the email notification for pipeline running status using Azure Logic Apps.

To copy multiple SharePoint lists using one pipeline, we created “bulk extraction pipeline” which in turn will call “copy each list to adw” (Screenshot in section 2 below).

2. Dynamic copying with Lookup and ForEach activity

In the above pipeline, we use Lookup activity to get the list of items that need to be copied. Then we use ForEach loop to copy each item one by one and loaded into the destination storage/database (Azure Synapse Analytics (ADW) in our case).

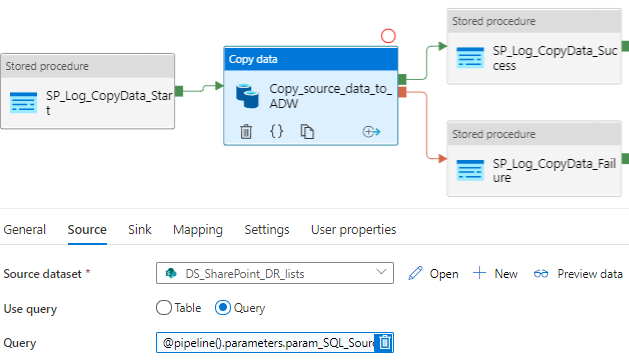

3. OData Query to fine control source data

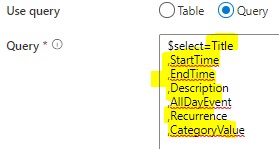

As shown in the screenshot below, the source of Copy activity is set to use Query. In the query itself, we are using parameters to store each query. We are all familiar with SQL query for getting data, and luckily enough, like SQL query, we can use OData query to select, filter the SharePoint list data.

Here is the link for the OData query documentation. The most common ones used are $select, $filter, $top, $orderby. Using OData query can help the creation of dynamic pipelines using parameters. Another advantage of using OData query is that we can select only columns you need, not the system generated metadata columns into your destination table.

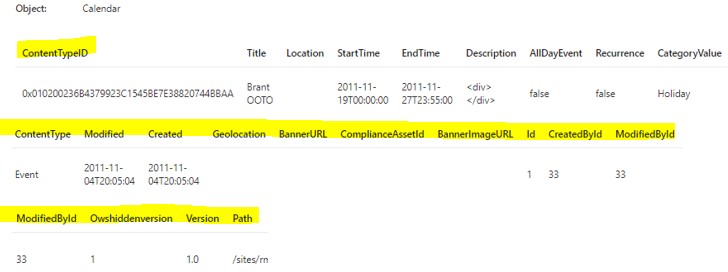

If table was used as the source for Copy activity, most of metadata columns (like Modified, CreatedById, etc. as highlighted above), that might not be needed to copy to the destination, were selected too.

While using OData query, we can select only the columns we need (see screenshot below). On top of that we filter and order data too.

Below is a simple example of how we create a control table and how we put OData query as a parameter value inside the control table. Please add a comment if more details needed.

Summary

With more and more connectors, activities, and functions are being added to Azure Data Factory, it’s more and more easier to use ADF as a tool not only to orchestrate data flow but also to do transforms (please see my previous blog for one example). In the upcoming blogs, I will continue to summarize our real-life data solutions using Azure Data Factory as well as other Azure Data Services.

English | EN

English | EN