“Just vibe it.” It sounds like something you’d hear at a music festival, not during a software (architecture) review. But here we are. Welcome to the world of vibe coding.

If you’ve been to a tech conference recently, opened LinkedIn, or scrolled Reddit for even a few minutes… you’ve seen it.

Vibe coding. Or should we say vibecoding? The buzzword of 2025. Born in a tweet from Andrej Karpathy back in February 2025, this term has vibed its way across the dev community faster than your IDE can autocomplete console.log.

But what’s actually behind the hype? Is it the next-gen programming paradigm? Or just another fleeting trend destined to be buried next to the blockchain-for-everything startup? Let’s dig in.

New Term, Huge Impact

Vibe coding is the idea that you no longer write code – you describe it. Think of it as prompt-driven software development. You map out what you want in natural language, throw it at a Large Language Model (LLM), and boom: it generates the code for you. You test it, “accept all,” and move on. If it fails? You paste the error back into the prompt. The LLM fixes it. Rinse and repeat. And, in Karpathy’s words, you “forget that the code even exists.”

It’s AI-dependent. It’s fast. And it’s happening everywhere. Absolutely no coding skills required.

At conferences, I’ve seen devs using Cursor (AI Code Editor) like a glorified notepad – barely scratching the surface of what these tools can do. But hey, it’s a vibe. They roll with whatever comes out of the box. No structure. No context. Just… vibes. And then, when the output isn’t great, they blame the tool. Not the vibes.

And it’s not just developers doing the whole vibe coding thing. Non-devs are diving in too: spinning up apps straight from a blank page to production like it’s a TikTok challenge. No tests. No security. No reviews. Just vibes. And guess what happens next? The server crashes. No fallback. No logging. Sometimes the database is wide open: leaking emails, passwords, maybe even grandma’s cookie recipe. Or they’re out here sharing their localhost URL with friends: “It works on my machine!” Or what about this one… “Guys, I’m under attack,” said the developer who made all API keys public in their app. Oops.

The internet is full of horror stories like these. But hey! It’s a vibe!

So, What About Quality?

Here’s the thing.

Vibe coding moves fast. But it sacrifices control.

Top engineers are already turning away from this approach when building serious systems. Why? Because quality isn’t guaranteed. And when LLMs aren’t trained on the latest security patches or architectural patterns, things get risky real quick. Someone without any coding knowledge won’t spot what’s missing or know how to apply current security guidance, so the latest threats simply go unaddressed.

This isn’t about resisting change – it’s about not handing over the steering wheel blindfolded.

So, Ditch It Altogether?

Not so fast.

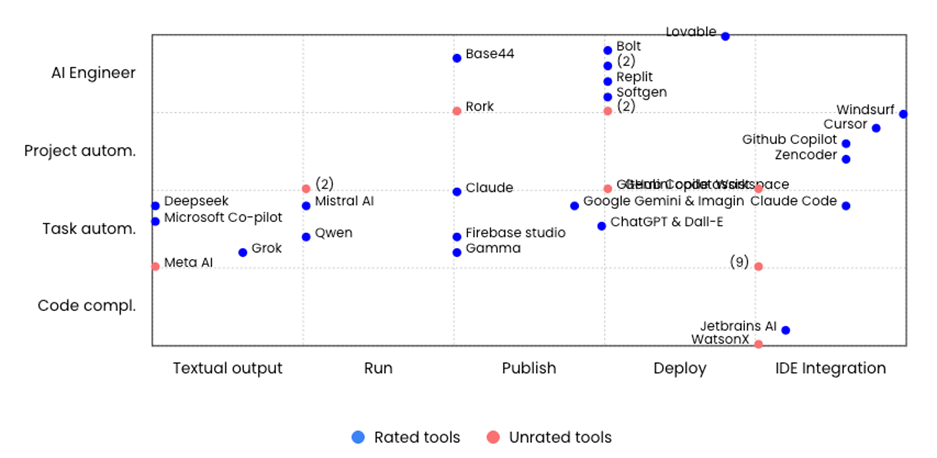

I’ve been deep in the Generative AI coding tool ecosystem for over half a year now (since September 2024). I’ve tested dozens of platforms: Lovable, Bolt, Replit, Base44, v0, Copilot, JetBrains AI, GitHub Copilot, Cursor and Windsurf (currently my personal favorite). From AI-first coding sandboxes to IDE-integrated copilots. I mapped them, along with many others, on a quadrant to see clearly how they’re evolving:

(In this quadrant, the y-axis shows the programming score and the x-axis the review score, or the IDE integration score for the last column. An up-to-date, community-driven quadrant can be found on craftingaiprompts.org.)

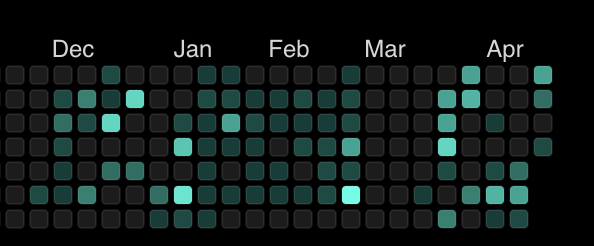

I used Windsurf, for example, almost daily from November onwards (in March, a bug in Windsurf meant completions weren’t tracked: vibe coded?).

So, what have I learned?

From Vibe Coding to AmpCoding

Vibe coding? Perfect for MVPs, quick experiments, generating front-end components, or helping non-tech stakeholders bring their ideas to life. Take a product owner, for example: they can now “speak developer” by vibe coding a concept and handing it over to the dev team as a starting point. No coding experience required. But for serious product teams? For large-scale codebases and long-term maintainability? We need something stronger.

That’s where AmpCoding comes in: Amplified Coding.

AmpCoding sits within the broader movement of Agentic Programming – where developers and Generative AI work together in a tight feedback loop. But unlike Vibe Coding, which lets the AI lead, AmpCoding puts the expert in the driver’s seat.

You guide the LLM with clear system prompts, context, architecture, and constraints. It’s not “accept all.” It’s “review with intent.”

You’re not replacing expertise: you’re amplifying it.

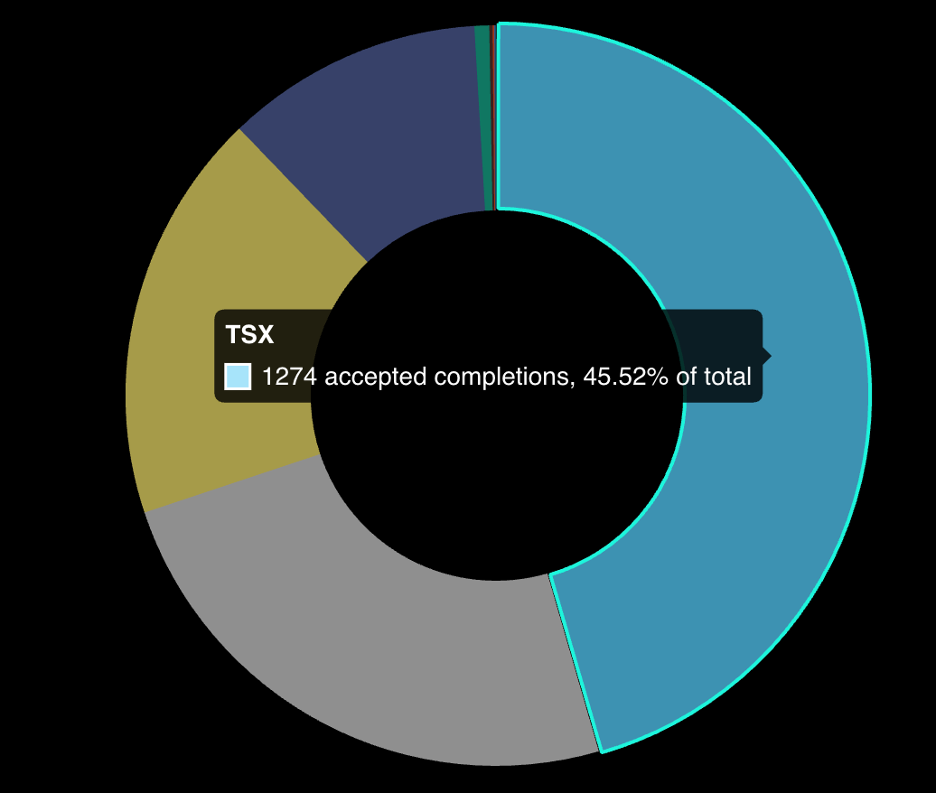

And let’s be real: based on my own stats, I accept only 45.52% of the generated code, measured over 1,274 completions (according to the Windsurf stats)! The rest? I refine, reject, reshape or code myself. In the long run, AmpCoding is MUCH faster than Vibe Coding. With vibe coding you might not notice the cost right away, but hello, technical debt!

Generative AI helps. But it’s still your name on the commit.


How I Use AmpCoding (and You Can Too)

Let’s say I have an idea, but no code yet. If I really have no clue, I might even start with ChatGPT to help ideate. But if I do have a direction, I start in Base44 or Bolt. I prompt it with what I want, map out the logic, and let it run wild. It builds the foundation. Not beautiful – but that’s the point: just vibecoding.

Then I take the useful parts (code) into my IDE – usually Windsurf – and use it as context with my prompt to technically integrate it into my app. Sometimes I even include a screenshot of the output from the previous tool for extra clarity. This is where the real magic happens.

Inside my repository, I’ve added (global) rule files: simple .md system prompts that define how we code. Which libraries and programming languages we use. How we structure files. Design choices, Definition of Done, and code style rules – clean, small, and testable. It works across the team, because you just drop the file into the repo. I include it in my prompts too, along with contextual files like components or classes to steer the LLM. Alternatively, I create a planning.md file: a structured outline of the feature I want to build, step by step.
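To make the idea of "rules plus context plus task" concrete, here is a minimal Python sketch of how such a prompt could be assembled by hand. The function name `build_prompt`, the section headings, and the file names are all assumptions made for illustration; this is not how Windsurf or any other tool works internally.

```python
from pathlib import Path


def build_prompt(task: str, rules_file: Path, context_files: list[Path]) -> str:
    """Combine team rules, context files, and the task into one prompt string.

    Hypothetical helper: the "## ..." section layout is an illustrative choice.
    """
    rules = rules_file.read_text(encoding="utf-8").strip()
    sections = [f"## Team rules ({rules_file.name})\n{rules}"]

    # Each context file (a component, a class, ...) gets its own labeled section.
    for path in context_files:
        body = path.read_text(encoding="utf-8").strip()
        sections.append(f"## Context: {path.name}\n{body}")

    # The actual request goes last, after the model has seen rules and context.
    sections.append(f"## Task\n{task.strip()}")
    return "\n\n".join(sections)
```

Calling `build_prompt("Add a loading state.", Path("rules.md"), [Path("Button.tsx")])` would return one string with the rules first, the component second, and the task last. The ordering is a design choice, not a requirement.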

When the LLM outputs code, I don’t blindly “accept all.” I use Windsurf’s GitHub-style UI to review changes. If something doesn’t feel right, I tweak it myself or prompt it smarter.

That’s how I stay in control – and move fast. I’m the expert, amplified by AI.

Every prompt (prompt credits), and sometimes every action (flow credits), burns credits. I ran out in 10 days without this approach. Now? I’ve optimized for speed, quality and cost. With the right Prompt Engineering, I summarize progress in .md files or generate diagrams for reuse. That helps future prompts, documents the process for others, and scales across large codebases. I often generate components with tools like Base44, then use those as context in Windsurf or planning files. Context is king!

Planning files are a game-changer. With just one prompt – and the planning file – I can generate most of a feature. That’s one prompt-credit for a full build, instead of many. And think about this… what if you write your user stories as if they are planning .md files?
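What could such a planning file look like? Below is a rough Python sketch that renders one from structured data, which is also one way to turn a user story into a planning .md file. The `Plan` class, its fields, and the markdown layout are assumptions for illustration, not a standard format.

```python
from dataclasses import dataclass, field


@dataclass
class Plan:
    """Hypothetical structure for a planning file; field names are assumptions."""

    feature: str
    goal: str
    steps: list[str] = field(default_factory=list)
    done_when: list[str] = field(default_factory=list)

    def to_markdown(self) -> str:
        """Render the plan as a step-by-step markdown outline with checkboxes."""
        lines = [f"# Plan: {self.feature}", "", f"Goal: {self.goal}", "", "## Steps"]
        lines += [f"{i}. [ ] {step}" for i, step in enumerate(self.steps, start=1)]
        lines += ["", "## Definition of Done"]
        lines += [f"- {item}" for item in self.done_when]
        return "\n".join(lines) + "\n"
```

A plan with the steps "Add endpoint" and "Send email" renders as a numbered checklist, which can be saved as planning.md and handed to the LLM together with a single prompt.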

I even switch between models depending on the job. Currently? GPT-4.1 for backend. Sonnet 3.7 for frontend. Sonnet 3.7 when using planning files (and sometimes the latest Gemini model). All orchestrated intentionally. And when I am done, I update my AI Changelog .md file, which I can include whenever I want to give the LLM the latest changes (fun fact: this can also be automated using the rules file). I also keep an eye on each tool’s built-in memory to make sure nothing is stored there that conflicts with my global rules, planning documents, or changelog files.
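Written down as code, that routing is little more than a lookup table. The sketch below assumes the model labels from the paragraph above; they are plain labels, not real API model identifiers, and `pick_model` is a hypothetical helper.

```python
# Labels taken from the text above; they are NOT real API model identifiers.
MODEL_BY_JOB = {
    "backend": "GPT-4.1",
    "frontend": "Sonnet 3.7",
}
PLANNING_MODEL = "Sonnet 3.7"


def pick_model(job: str, uses_planning_file: bool = False) -> str:
    """Return the model label for a job; planning-file runs take priority."""
    if uses_planning_file:
        return PLANNING_MODEL
    try:
        return MODEL_BY_JOB[job]
    except KeyError:
        # Fail loudly instead of silently falling back to some default model.
        raise ValueError(f"no model configured for job {job!r}") from None
```

Raising on an unknown job is deliberate in this sketch: the point of orchestrating intentionally is that no model gets picked by accident.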

And the final touch? Yep! That’s me. The one pulling the strings, orchestrating models, optimizing credits (still Dutch, after all…), and building systems that scale. With the right planning, the right tools, clever prompting and my coding expertise, I’m not just using AI: I’m engineering with it. I’m in control now. It amplifies me.

Refactor or Flush Your Code?

One surprising insight I’ve gained: LLMs are often better at generating fresh code than refactoring existing code. As developers, we’re wired with a “refactor mindset.” So am I. We see messy code, and we want to clean it up – that’s a good instinct. Right? But this isn’t always where LLMs shine. Instead, I’ve started using a technique I call “flushing code.” Rather than asking the model to refactor an existing block, I prompt it to rewrite the functionality from scratch (flush it) – in a clean, new file – and then replace the old version entirely (or the tool does this automatically by creating a .bak file). Why? Because LLMs tend to produce higher quality output when they’re free from the constraints of legacy structure. I can also guide it by incorporating the latest and greatest advancements, which adds an extra layer of quality – especially now, with all the new possibilities introduced by MCP (Model Context Protocol) Servers. It allows you to connect your IDE (and LLM) directly to a range of useful resources and tools, like up-to-date framework documentation, security risk databases, and more.

So now, I consciously ask myself: should I refactor this – or flush it? But that mindset shift took time. Trust me. And yes, as a Software Engineer, you probably understand just how important tests become in moments like these.
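A flush is easy to do safely by hand, too. The Python sketch below writes the freshly generated code next to the old file, runs a check on it, and only then swaps it in while keeping the old version as a .bak file. The function name `flush_file` and the `check` callback are assumptions for illustration; in practice the check would be your test suite.

```python
from pathlib import Path
from typing import Callable


def flush_file(target: Path, new_source: str, check: Callable[[Path], bool]) -> bool:
    """Replace an existing file with regenerated source, keeping a .bak copy.

    Hypothetical helper: assumes `target` already exists. Returns True when
    the swap happened, False when the candidate was rejected by `check`.
    """
    candidate = target.with_name(target.name + ".new")
    backup = target.with_name(target.name + ".bak")

    candidate.write_text(new_source, encoding="utf-8")
    if not check(candidate):
        candidate.unlink()  # rejected: the legacy file stays untouched
        return False

    target.replace(backup)     # old version survives as <name>.bak
    candidate.replace(target)  # fresh version takes its place
    return True
```

If the check fails, nothing changes on disk except that the rejected candidate is deleted, so a bad generation costs a prompt and nothing more.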

From Vibing to Leading: The Future is Amplified

Vibe coding is real – and for the right use cases, it works. It’s perfect for rapid ideation, MVPs, low-stakes prototyping, giving non-tech stakeholders a voice in the process, and much more.

But when the stakes are high? When you’re building products that demand quality, security, and scale?

You don’t want – just – vibes: you need precision, structure, and control.

That’s where AmpCoding comes in. It’s not about replacing developers. It’s about amplifying skill, speed, and impact.

Let Generative AI do the heavy lifting: but you stay in the driver’s seat. The future isn’t just automated. It’s amplified.

What’s your take? Are you vibing? Amplifying? Or just coding? Drop a comment and let’s talk.
