If you are to believe cartoons and old timey comedy slapstick humour, the protagonist putting on a moustache was enough of a disguise to fool people into thinking they are someone completely different. Of course, the comedy lies in the complete absurdity of that moustache being able to change someone’s identity completely. But what if you are a machine learning algorithm? As it turns out, it is not so funny anymore as it becomes more fact than fiction.

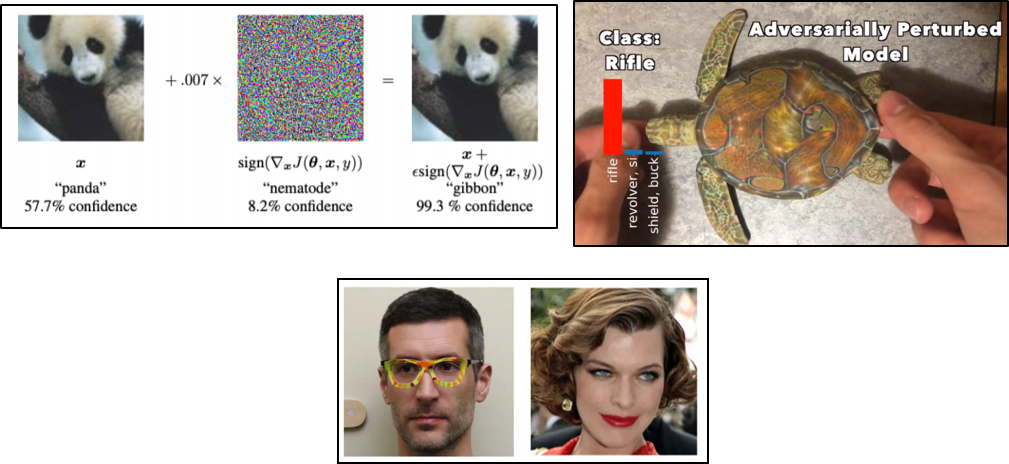

Adversarial attacks against deep neural networks try to do exactly what our protagonist is doing — to fool the model into thinking it is someone else. A paper done by Szegedy et al. in 2014 was the first to note some interesting weaknesses of deep neural networks, for instance that they can be easily fooled by adversarial examples into giving the wrong output. Studies of this weakness have turned a panda into a gibbon (Goodfellow et.al, 2014), a turtle into a rifle (Athalye, Engstrom, Ilyas and Kwok, 2017) and a person into Milla Jovovich (Sharif et.al, 2017).

In an adversarial attack, what the attacker attempts to do is to get the model to give a wrong prediction or classification. In a real world scenario, the attacker could want to fool a facial recognition model to make it think it is someone else in order to avoid detection. This is similar to what the researchers Sharif et.al attempted to do by using glasses specifically designed to add extra pixels to someone’s face so that the model would classify this person as someone else. In the simplest sense, an image recognition model learns patterns and pixel values associated with the images that the model is designed to recognise — cats or apples, for example. The model will train on images of cats and apples and we tell the model what it is looking at is a cat or an apple. Eventually, when we show a new image of an apple to this trained model, the model will need to decide if the patterns and pixel values correspond more to a cat or to an apple. However, I can add some extra noise and change pixel values to the original image of an apple to trick the model into thinking it is looking at a cat instead. I can do this if I can somehow know how the model separates what is a cat and what is an apple. This information can be inferred if I know details of the model or even the prediction scores the model gives to any image that it gets. Below is an example of how an input image can be changed to get a model to give it another result. These changes to an input are called perturbations and a perturbed image designed to fool a neural network is called an adversarial example.

Adversarial examples are designed by changing the inputs to a machine learning model ever so slightly, sometimes not even noticeable to humans. This could be very minute perturbations to pixels in the images to confuse the model into classifying it into another class or it could be physical additions to the image like in the case of the glasses and the 3D printed turtle. The implications of this is far more sinister when we think about adversaries using this weakness for malicious purposes. The examples so far have all been image related but the same can be done to text models, audio as well as to confuse reinforcement learning agent models.

There are different kinds of attacks on machine learning models:

– White box attacks assume that the attacker has all knowledge of your machine learning model including its structure, parameters and inputs.

– Black box attacks are different in that they assume that the attacker has no knowledge of the internal workings of the model and can only give an input to the model and see the output result/decision of the model.

– Finally, grey box attacks are when the attacker creates adversarial examples from another model that is known to the attacker (white box) to attack another black box model.

Toughening up

There are several strategies to employ in order to defend against such adversarial attacks. Broadly speaking, one can either focus on improving the AI/ML model and making it more robust against attacks or one can focus on removing any malicious perturbations on the inputs before sending it to the AI/ML model. In a paper by Qiu et.al (2020), they suggest using pre-processing and data augmentation strategies on the inputs to remove any malicious perturbations before sending it to the model. To this end, the researchers have developed a toolkit called FenceBox that allows the users to select from different processing and augmentation strategies to help mitigate against most attacks. The main advantage of this strategy is that it is simple to implement and does not cost too much in terms of computational costs since no retraining of models is required. The disadvantage is that it cannot prevent against some advanced attacks.

Strategies focused on improving the AI/ML model aim to retrain existing models. The most effective strategy to defend against adversarial attacks is adversarial training where adversarial examples are generated and added to the training data when training the model. Think of it as how boxers train with other boxers to improving their skills. Training this way ensures that the model does not get fooled by malicious inputs as it has already been trained on them. Training with examples of adversarial/perturbed images along with clean images increases robustness of models. This means that the model can perform better on new and unseen data. It can, however, reduce the accuracy of a model on clean/non-malicious images so there is a small trade-off when using adversarial training as a defensive strategy.

There are several open source packages available that benchmark AI model vulnerability against attacks like the CleverHans library. For text models there is a python framework called TextAttack which finds vulnerabilities in your models and allows you to do adversarial training to make your models more robust. Finally, there are also new techniques using GANs to generate adversarial examples for your model to then use for adversarial training like the ones researched by Jalal et.al (2019), Xiao et.al (2019) and Bai et.al (2021) to highlight a few.

There are other practical steps that AI/ML model developers can do to prevent attacks like avoid showing probability scores. When confidence scores are provided to the end user, a malicious attacker can use them to numerically estimate the gradient of the loss function and therefore be able to create adversarial examples. The best strategy can be a combination of the above but it is something that needs to be built into the design when building AI/ML models. At Sogeti, we use a Quality AI framework which is a framework to guide the AI/ML developer to think about important topics around ethics, transparency, bias etc. Adversarial training is one of those important topics that needs to be considered in the development cycle to ensure we are building responsible robust and tough models.

English | EN

English | EN