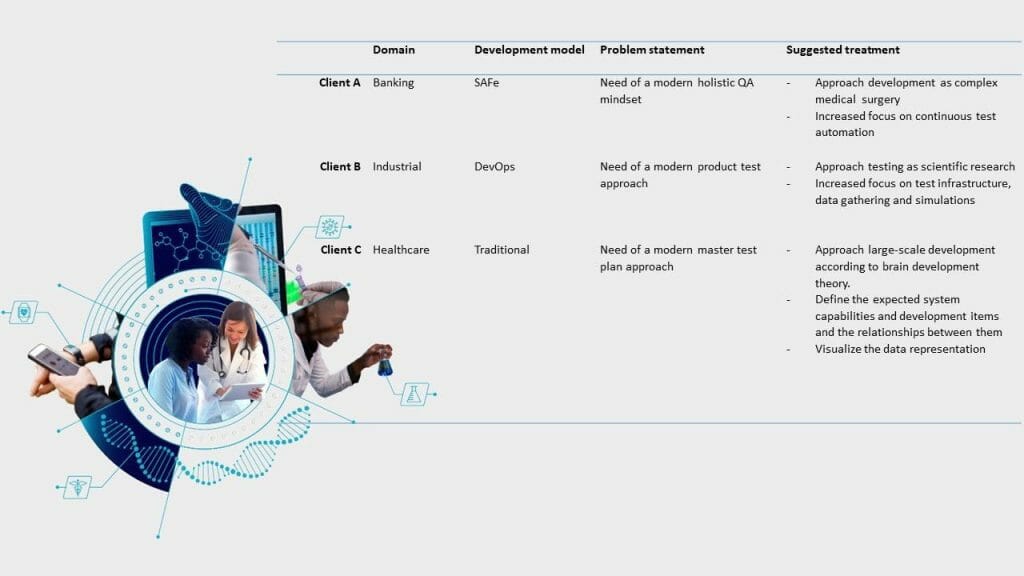

Over the past six months I have had the privilege of working with three great organizations on how to tackle an increasingly complex development landscape.

Below is a brief description of each:

In the first case, Client A, a fintech development organization, we worked according to the SAFe framework, and I found the maturity of the testing and development processes to be at an acceptable level. However, the development teams focused first and foremost on developing the components they were responsible for, with the negative effect that the high-level test environment continuously broke down due to unforeseen dependencies. So, instead of trying to establish a traditional, mechanical test strategy to stop bugs from spreading (which would likely have slowed development down), I based my strategy on the narrative that our system landscape was our “patient” to care for, and that each component was a living organ for us to operate on – much like open surgery on the digital twin of our own business.

In the second case, Client B, I had the honor of helping to establish a product test team for an R&D department within industrial automation. Since this was a new team in a rather traditional organization with modern development aspirations, we were also asked to challenge the established mental models. In this context I found it easier to talk about the scientific approach to software development. Since our job was to verify the various capabilities of the product, our ambition was to create a vast number of simulations in order to push the boundaries of the product, foresee future obstacles and continuously send feedback to the development teams. Our first focus was the infrastructure of the automated test framework: by making it autonomous and containerized, we enabled the tests to be executed in parallel instead of sequentially. Our second focus was to handle all the needed data in a controlled way, by establishing a data repository in which to gather our test results, application and server logs, development data and diagnostics.
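To illustrate why autonomous, containerized suites matter, here is a minimal Python sketch of running independent test suites in parallel rather than sequentially. It assumes each suite can run in isolation. `run_suite` is a hypothetical stand-in that only sleeps; a real version would start a container and map its exit status to pass/fail. The suite names are invented.

```python
import time
from concurrent.futures import ThreadPoolExecutor


def run_suite(name: str, duration_s: float) -> tuple[str, bool]:
    """Hypothetical stand-in for one containerized test suite.

    A real version would launch a container and map its exit code to
    pass/fail; here we only sleep to simulate the suite's runtime.
    """
    time.sleep(duration_s)
    return name, True


def run_sequentially(suites: dict[str, float]) -> dict[str, bool]:
    # Total runtime is roughly the sum of all suite durations.
    return dict(run_suite(name, d) for name, d in suites.items())


def run_in_parallel(suites: dict[str, float], workers: int = 4) -> dict[str, bool]:
    # Independent suites run concurrently, so total runtime approaches the
    # duration of the slowest suite (given enough workers).
    with ThreadPoolExecutor(max_workers=workers) as pool:
        futures = [pool.submit(run_suite, name, d) for name, d in suites.items()]
        return dict(f.result() for f in futures)


if __name__ == "__main__":
    suites = {"motion-control": 0.2, "safety-interlock": 0.2, "hmi-alarms": 0.2}
    for runner in (run_sequentially, run_in_parallel):
        start = time.perf_counter()
        results = runner(suites)
        print(runner.__name__, results, f"{time.perf_counter() - start:.2f}s")
```

Threads are enough here because each suite mostly waits on an external process; the speed-up only holds if the suites really are independent, which is exactly what making them autonomous and containerized is meant to guarantee.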

In the third case, Client C, I was part of a team tasked with establishing a master test plan for the rollout of an entire application framework supporting the business of healthcare clinics in a Swedish county. Since large-scale projects of this type consist of thousands of newly developed features, integrations and infrastructure components, and enable endless variants of business experiences, we treated each development item as a node in a complex network of tasks. We started to show how a modern development plan is based on a data model representing these masses of nodes and their countless relationships, much like how synapses and neurons hold a nervous system together and how it evolves.
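The idea of treating development items as nodes in a network can be made concrete with a small dependency graph. The Python sketch below assumes each item records which items it depends on (the item names are hypothetical) and uses a breadth-first search to find every item that could be affected, directly or transitively, when one item changes. It is one simple way to express such a data model, not the model used at Client C.

```python
from collections import defaultdict, deque


def build_dependents(edges: list[tuple[str, str]]) -> dict[str, set[str]]:
    """Map each item to the items that depend on it.

    `edges` holds (item, depends_on) pairs.
    """
    dependents: dict[str, set[str]] = defaultdict(set)
    for item, depends_on in edges:
        dependents[depends_on].add(item)
    return dependents


def impacted_by(changed: str, edges: list[tuple[str, str]]) -> set[str]:
    """Breadth-first search over reverse dependencies starting at `changed`."""
    dependents = build_dependents(edges)
    impacted: set[str] = set()
    queue = deque([changed])
    while queue:
        node = queue.popleft()
        for dependent in dependents.get(node, set()):
            if dependent not in impacted:
                impacted.add(dependent)
                queue.append(dependent)
    impacted.discard(changed)  # a dependency cycle may lead back to the changed item
    return impacted


if __name__ == "__main__":
    edges = [
        ("booking-ui", "patient-record-api"),
        ("lab-integration", "patient-record-api"),
        ("sms-reminders", "booking-ui"),
        ("billing-report", "invoice-service"),
    ]
    print(sorted(impacted_by("patient-record-api", edges)))
```

With these hypothetical edges, a change to `patient-record-api` reaches `booking-ui` and `lab-integration` directly and `sms-reminders` transitively, while `billing-report` is untouched; this is the kind of "unforeseen dependency" that also broke the test environment at Client A.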

Tied to these experiences, I’ve noted down a handful of unstructured observations below on how we, as organizations, shifted our approach to various aspects of software development – just by trying to see our development landscape as something complex.

Observation no1: Changed perception of test automation

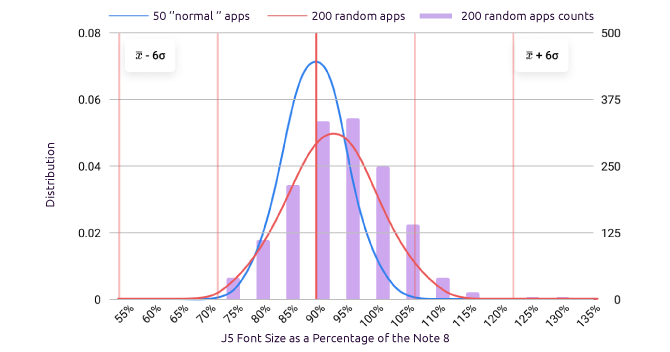

As an effect of seeing the development landscape as something complex, we start to see test automation from another angle: no longer as something that we execute exhaustively at the end of development, but as something that can provide us with continuously updated information _during_ development. As soon as a change in the source code is detected, we have the technical ability to include that change in our simulations and send a prognosis, with multiple predictions of its possible impact, back to the developer. This relationship to test automation only becomes truly interesting once we as an organization understand the digital version of Newton’s third law of motion: for every action there is a reaction. In complex situations, this reaction can sometimes be predicted and sometimes not. If we embrace complexity, we will inevitably become curious about the possible reactions to our actions. This need for information can be met by selecting a small set of your most business-critical checks and scenarios and executing them continuously at a high frequency. As a matter of fact, I constantly ask one question when assessing any team’s or organization’s maturity in test automation: at what frequency are you executing your scripts? The answer often gives me a deeper understanding of how a team relates to its test automation than any question on coverage. This observation applied foremost to Client A, and to some extent to Client B as well.
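A minimal Python sketch of the "small selection, high frequency" idea. The check names are hypothetical placeholders, and the simple loop stands in for whatever scheduler a team actually uses (a CI schedule, cron or a test orchestration platform). The point is only that the same few business-critical checks run on a fixed cadence and that every result is kept with a timestamp.

```python
import time
from datetime import datetime, timezone
from typing import Callable

# A check is any zero-argument callable that returns True on success.
Check = Callable[[], bool]


def run_checks_periodically(
    checks: dict[str, Check], interval_s: float, iterations: int
) -> list[tuple[datetime, str, bool]]:
    """Run the same small set of checks every `interval_s` seconds.

    Returns (timestamp, check name, passed) rows: the raw trend data.
    """
    history: list[tuple[datetime, str, bool]] = []
    for _ in range(iterations):
        cycle_start = time.monotonic()
        timestamp = datetime.now(timezone.utc)
        for name, check in checks.items():
            try:
                passed = bool(check())
            except Exception:
                # A crashing check counts as a failure instead of stopping the loop.
                passed = False
            history.append((timestamp, name, passed))
        # Sleep for whatever remains of the interval so cycles stay evenly spaced.
        time.sleep(max(0.0, interval_s - (time.monotonic() - cycle_start)))
    return history


if __name__ == "__main__":
    # Hypothetical business-critical checks; real ones would call the system under test.
    checks = {
        "customer_can_log_in": lambda: True,
        "payment_quote_is_returned": lambda: True,
    }
    for row in run_checks_periodically(checks, interval_s=1.0, iterations=3):
        print(row)
```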

Observation no2: Changed perception of data

At both Client A and Client B, because we saw our systems as complex, we started to realize that a verified test result obtained at one point in time does not let us foresee the future: we cannot predict what the result will be the next time we test. Hence, to establish a feeling of confidence, we want to execute our most vital automated checks far more frequently than ever before. I’m not talking about days or hours; I’m talking about every second or minute. If we don’t test, we will have no historical trend data to analyze. Working this way is also a natural consequence of seeing your system landscape as a complex creature. Building confidence through continuous, machine-driven checking produces a significant amount of data that must be gathered and handled in a controlled manner. This data, however, also needs to be analyzed professionally. Data is not the answer to anything; it merely gives us a way to see things more clearly. It gives us a chance to look at trends and causal relationships, and to discuss thresholds and confidence intervals. In short, data science skills will become increasingly important in any development team that operates in a complex digital landscape.
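To make "thresholds and confidence intervals" concrete: one common way to judge whether a check’s pass rate over a time window has really dropped, rather than just fluctuated, is a confidence interval for a proportion. The Python sketch below uses the Wilson score interval. That technique is our choice for illustration, not something prescribed above, and the 99% threshold, the roughly 95% confidence level (z = 1.96) and the window data are assumptions.

```python
import math


def wilson_interval(passes: int, runs: int, z: float = 1.96) -> tuple[float, float]:
    """Wilson score interval for a pass rate (z = 1.96 gives roughly 95% confidence)."""
    if runs <= 0:
        raise ValueError("runs must be positive")
    p = passes / runs
    denom = 1 + z**2 / runs
    centre = (p + z**2 / (2 * runs)) / denom
    half_width = z * math.sqrt(p * (1 - p) / runs + z**2 / (4 * runs**2)) / denom
    return centre - half_width, centre + half_width


def degraded_windows(
    windows: list[tuple[str, int, int]], threshold: float = 0.99
) -> list[str]:
    """Flag windows whose pass rate is confidently below `threshold`.

    `windows` holds (label, passes, runs). A window is flagged only when even
    the upper bound of its interval falls below the threshold, so a single
    unlucky failure in a small sample does not raise an alarm.
    """
    flagged = []
    for label, passes, runs in windows:
        _, upper = wilson_interval(passes, runs)
        if upper < threshold:
            flagged.append(label)
    return flagged


if __name__ == "__main__":
    # Hypothetical hourly windows for one business-critical check run every minute.
    windows = [("09:00", 60, 60), ("10:00", 59, 60), ("11:00", 52, 60)]
    print(degraded_windows(windows))
```

With this hypothetical data, one failure in the 10:00 window stays within normal variation, while eight failures at 11:00 push the whole interval below the threshold. Note that this only works because the checks run often enough to give each window a meaningful sample size.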

Observation no3: Requirements and defects

As a natural consequence of the organic approach, and of seeing our system landscape as our patient to care for, we start seeing requirements and defects from another perspective. We move away from treating them as a blueprint or a furniture assembly manual to follow step by step, where any deviation is something scary, towards something more multifaceted and diverse. We approach requirements as desires, wishes and beliefs about what our patient wants to be capable of, and it is our job to make those dreams come true through our development. The source code that we produce holds the possibility to cure digital diseases, to act as digital prosthetic arms that extend our patient’s reach, and to help our patient reach a new level of digital happiness. But we also understand that not all of our treatments can be successful. Hence, we start to see defect reports as observations about our patient, and it is our job to enrich these observations with more information until we understand if and how to act upon them. These observations become something interesting as we put the pieces together to understand the big picture – a piece of art that is complex and constantly evolving. This transformation could be observed at Client B.

Observation no4: Quality Assurance

When we see our system landscape as our patient to treat and care for, I’ve noticed a distinct paradigm shift in the way we approach quality assurance. Seeing quality as merely the absence of bugs is not enough; it would be like defining individual wellbeing or happiness as the absence of diseases and medical diagnoses, and most of us can see that this makes little sense. As a matter of fact, the annual World Happiness Report explains happiness per country through seven components: GDP per capita, social support, healthy life expectancy, freedom to make life choices, generosity, perceptions of corruption, and a “dystopia” benchmark plus residual. Likewise, process quality does not merely equal development speed: the best doctor or hospital is not necessarily the one that treats the most patients per time interval or prescribes the most medication. Slowly, more and more individuals in the development teams start to ask how we can be confident that our development is ‘safe and effective’. This will be the new QA narrative. Safe development can be enabled by CI/CD pipelines, automated deployment, infrastructure and all the other components that we today associate with professional development. But the other question is: are our development efforts leading to the desired effect? Asking questions like this comes naturally to any development team that is used to working in a complex environment. This effect could be observed at all three clients.

Observation no5: On the topic of tooling

Our relationship to the tools we use in software development and testing is about to transform once we understand the level of complexity in which we operate. We move from the idea that tools should perform our simplest and most repetitive tasks for us to a more assistance-centric approach. How can tools help us understand our system landscape better, observe complex patterns, make smarter judgments and remember things more accurately? This observation was most clearly seen at Client A and Client B. As an example, I often refer to how algorithms have helped medical doctors make more accurate diagnoses from X-ray images, because historical data has been gathered and analyzed over a reasonable amount of time. The same pattern can be observed in our own craft, as we see more examples of algorithms helping us make better judgments in software engineering. Here is a collection of various application areas and tools where algorithms can help. But to start with, I see a clear trend of data platforms like Splunk and Elastic Stack being incorporated into testers’ toolsets in order to gain some level of understanding of the bigger picture (I find this video from Splunk very informative). I’m glad to see that a couple of next-generation testing tools, called test orchestration platforms, are trying to address this need for an overview of the complexity by combining high-frequency test script execution with data gathering from various sources, LambdaTest and its HyperExecute for example. Also, a new collaboration between Sogeti and Microsoft has resulted in the SARAH (Scalable-Adaptive-Robust-AI-Hub) platform, in which I see huge potential for those working in the Microsoft Azure DevOps tooling ecosystem.

Observation no6: On the topic of planning

How do we approach planning in a truly complex setting? Historically, traditional project models inherited from industry have served us well for simple tasks, and to deliver complicated, large development projects we have leaned towards agile methodologies for quite some time now. But what happens when development becomes non-deterministic, when the outcome is impossible to predict and rules, guidelines, frameworks and processes seem to provide little or no value? This is what happens when we embrace complexity in development planning. We come to understand that creating a plan for thousands of development items could instead be like creating a data model and algorithms that calculate and predict the number of patients in an intensive care unit. Complex development endeavors are just like that: we look at each complicated development task as if it were a patient. When that task is set to DONE, it is as if we were sending that patient home again, with a high likelihood of being readmitted in the near future depending on their particular medical history. This is exactly like software development, where we know that some issues and tasks keep coming back. This observation was very tightly coupled to the discussions with Client C.
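The "readmission" analogy can be turned into a first, deliberately naive planning model. The Python sketch below estimates, per component, how often items marked DONE have later been reopened, using add-one (Laplace) smoothing so that components with little history are not assigned 0% or 100%. It then sums those rates to estimate how many currently closed items may come back. The component names, the smoothing choice and the default rate for unseen components are assumptions for illustration; a real model would draw on far richer history.

```python
from collections import Counter


def reopen_rates(history: list[tuple[str, bool]]) -> dict[str, float]:
    """Estimate P(reopened) per component from (component, was_reopened) records.

    Uses add-one (Laplace) smoothing: (reopened + 1) / (closed + 2).
    """
    closed = Counter()
    reopened = Counter()
    for component, was_reopened in history:
        closed[component] += 1
        if was_reopened:
            reopened[component] += 1
    return {c: (reopened[c] + 1) / (closed[c] + 2) for c in closed}


def expected_reopens(
    closed_items: list[str], rates: dict[str, float], default_rate: float = 0.5
) -> float:
    """Expected number of DONE items that will come back.

    By linearity of expectation this is the sum of the per-item probabilities.
    Components without history fall back to `default_rate`, which equals the
    smoothed estimate for zero observations: (0 + 1) / (0 + 2).
    """
    return sum(rates.get(component, default_rate) for component in closed_items)


if __name__ == "__main__":
    # Hypothetical history of items that were set to DONE.
    history = [
        ("billing", True),
        ("billing", False),
        ("billing", False),
        ("booking-ui", False),
    ]
    rates = reopen_rates(history)
    print(rates)
    print(expected_reopens(["billing", "billing", "booking-ui", "new-module"], rates))
```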
