Imagine a super-smart robot that helps you with everything, from answering your questions to making cars drive themselves, and even helping doctors find cures faster. This is what Artificial Intelligence, or AI, is doing in our world right now. It’s like magic, changing how we live and work every day.

But here’s a little secret: all this magic needs a lot of power to run. Training these smart robots and keeping them working uses huge amounts of energy, which means more pollution and harm to our planet. As AI gets smarter and more common, its impact on the environment is growing, and we cannot just pretend it’s not there anymore. It’s a powerful tool, but one with a price we need to think about.

Why AI Energy Use Matters

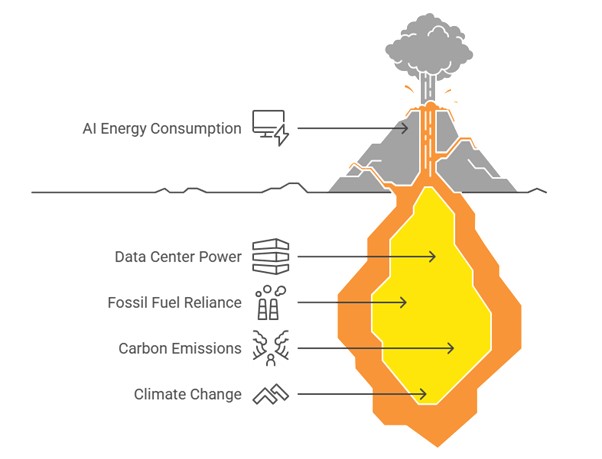

Suppose you are teaching a super-smart robot to think and talk like a human. To do that, you need a giant team of powerful computer chips called GPUs, all working non-stop, day and night, inside huge data centers. These data centers gobble up a massive amount of electricity, much of which still comes from burning fossil fuels like coal and gas. By some estimates, training just one big language model can release as much carbon dioxide (CO2) into the air as several cars do over their whole lifetimes.

But it does not stop there. Once the robot is ready, it needs energy every time you use it, whether it’s powering your voice assistant, picking what movies to recommend, or driving a self-driving car. As more people and businesses start using AI, the total energy it uses and the pollution it causes keep growing. If we do not find smarter, cleaner ways to power AI, it could seriously harm our planet.

The Role of Optimization

Optimizing AI models to be more energy efficient means creating and using algorithms that work just as well, or even better, while using less computing power. This can include:

Model Compression: Methods like pruning, quantization, and knowledge distillation make models smaller with little or no loss of accuracy, which reduces the amount of energy they use.

Efficient Architectures: Creating smart designs for neural networks that need fewer steps to work or that use only the most important parts of the data can greatly reduce the energy they use. By making the network simpler and more focused, we can save a lot of power while still getting great results.

Hardware-Aware Optimization: Adjusting AI models to work better on specific types of hardware, such as specialized AI chips, can help them run faster and use less energy. By matching the model to the hardware, we get more done with less power.

Smart Training Strategies: Using clever methods like transfer learning and low-shot learning helps AI models learn faster with less data. This helps avoid repeating the same training steps, saving time, computing power, and energy.
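To make model compression a little more concrete, here is a minimal sketch of two of the ideas mentioned above: pruning (dropping the smallest weights) and quantization (storing weights as small integers instead of full floating-point numbers). It uses a toy list of made-up weights rather than a real neural network, and the function names `prune_by_magnitude`, `quantize_int8`, and `dequantize` are hypothetical helpers written for this illustration, not part of any library.

```python
# Illustrative sketch only: toy weights, not a real model.

def prune_by_magnitude(weights, fraction):
    """Zero out the given fraction of weights with the smallest absolute value."""
    k = int(len(weights) * fraction)  # how many weights to drop
    if k == 0:
        return list(weights)
    # Indices of the k weights closest to zero.
    by_size = sorted(range(len(weights)), key=lambda i: abs(weights[i]))
    drop = set(by_size[:k])
    return [0.0 if i in drop else w for i, w in enumerate(weights)]


def quantize_int8(weights):
    """Map floats to integers in [-127, 127] using one shared scale factor."""
    max_abs = max(abs(w) for w in weights)
    scale = max_abs / 127 if max_abs > 0 else 1.0
    return [round(w / scale) for w in weights], scale


def dequantize(q_weights, scale):
    """Recover approximate float weights from the stored integers."""
    return [q * scale for q in q_weights]


# Made-up example weights (assumption for illustration).
weights = [0.82, -0.03, 0.40, 0.01, -0.65, 0.07, -0.002, 0.29]

pruned = prune_by_magnitude(weights, fraction=0.5)
q_weights, scale = quantize_int8(pruned)
restored = dequantize(q_weights, scale)

# Largest difference between the pruned weights and their 8-bit approximation.
max_error = max(abs(a - b) for a, b in zip(pruned, restored))

print("pruned:   ", pruned)
print("int8:     ", q_weights)
print("max error:", max_error)
```

In this toy example, half of the weights become zero and the rest fit in a single byte each, while the rounding error stays within half of one quantization step. Real models usually need some fine-tuning after pruning to recover accuracy, and the actual energy savings depend on whether the hardware can take advantage of the zeros and the smaller numbers.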

Environmental and Economic Benefits

Making AI use less energy helps cut down on carbon pollution, which is great for fighting climate change. It also saves money, since running AI systems can be really expensive because of high energy costs. When AI is more energy-efficient, it becomes easier for more people to use it, including people in regions where electricity access is limited. This helps make sure technology is shared more fairly around the world.

A Call to Action for the AI Community

Scientists, tech builders, and business leaders should focus on protecting the planet while creating new AI tools. Being open about how much energy AI projects use can help people understand the impact and make smarter choices. Using clean energy in data centers and building AI in more efficient ways can help technology grow without hurting the environment.
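One simple way to be open about energy use is to report a rough estimate alongside a project. A common back-of-the-envelope approach multiplies the number of chips, their average power draw, the hours they run, and the data center's overhead factor (often called PUE, or power usage effectiveness), then multiplies the resulting energy by the carbon intensity of the local electricity grid. The sketch below follows that approach. Every number in it is a hypothetical placeholder chosen for illustration, not a measurement of any real system, and the function names are made up for this example.

```python
def training_energy_kwh(num_gpus, avg_power_watts, hours, pue):
    """Estimate energy drawn from the grid in kWh, including data center overhead."""
    return num_gpus * avg_power_watts * hours * pue / 1000


def emissions_kg_co2e(energy_kwh, grid_kg_co2e_per_kwh):
    """Convert energy into estimated emissions for a given grid carbon intensity."""
    return energy_kwh * grid_kg_co2e_per_kwh


# Hypothetical training run (all values are assumptions for illustration).
energy = training_energy_kwh(
    num_gpus=64,          # number of GPUs used
    avg_power_watts=300,  # average draw per GPU
    hours=240,            # ten days of training
    pue=1.2,              # data center overhead factor
)

# Same run on two hypothetical grids: fossil-heavy vs. mostly clean energy.
fossil_heavy = emissions_kg_co2e(energy, grid_kg_co2e_per_kwh=0.40)
mostly_clean = emissions_kg_co2e(energy, grid_kg_co2e_per_kwh=0.05)

print(f"Energy used:       {energy:.1f} kWh")
print(f"Fossil-heavy grid: {fossil_heavy:.1f} kg CO2e")
print(f"Mostly clean grid: {mostly_clean:.1f} kg CO2e")
```

Even with identical hardware and training time, the estimated emissions differ by a factor of eight between the two hypothetical grids, which is why the choice of energy source matters as much as the efficiency of the model itself.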

Conclusion

AI has the power to help solve big problems around the world, but we cannot ignore the effect it has on the environment. By making AI use less energy, we can enjoy its benefits while also taking care of the planet. It’s a smart way to advance without compromising nature.

References

[1] Patterson, D., Gonzalez, J., Le, Q., Liang, C., Munguia, L. M., Rothchild, D., … & Dean, J. (2021). Carbon Emissions and Large Neural Network Training. arXiv preprint arXiv:2104.10350.

[2] Li, H., Kadav, A., Durdanovic, I., Samet, H., & Graf, H. P. (2016). Pruning Filters for Efficient ConvNets. arXiv preprint arXiv:1608.08710.

[3] Han, S., Mao, H., & Dally, W. J. (2015). Deep Compression: Compressing Deep Neural Networks with Pruning, Trained Quantization and Huffman Coding. arXiv preprint arXiv:1510.00149.

[4] Schwartz, R., Dodge, J., Smith, N. A., & Etzioni, O. (2020). Green AI. Communications of the ACM, 63(12), 54-63.
