I was led to believe, as many of us have been, that we live in the Information Age. And whilst there is little doubt about the proliferation of information since the birth of computing, the more recent arrival of artificial intelligence has thrown a cat amongst the pigeons when it comes to accurately defining the era.

For me, two key factors have driven the exponential growth that defines the Information Age:

- The advent of computers with increased storage and processing power to help expedite work.

- Increases in global population, meaning there are more people conducting work, investigating new topics and building on the knowledge of others.

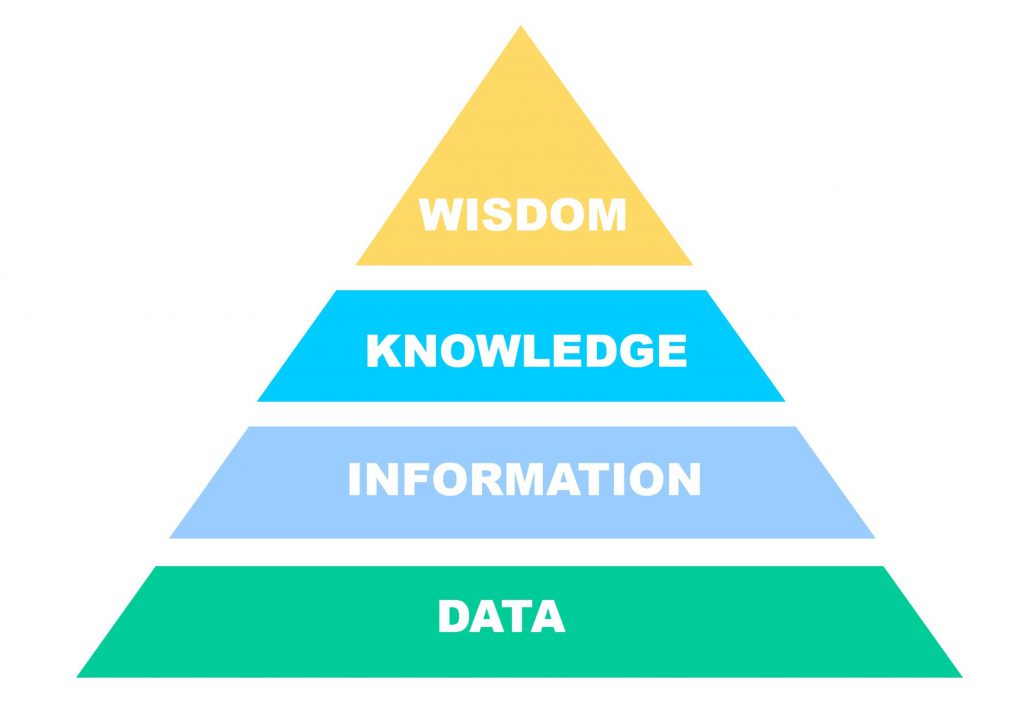

Recently I caught a repeat of “The Joy of Data” on BBC4, presented by Hannah Fry. One episode showed the picture above, which proposes that data leads to information, information leads to knowledge, and knowledge in turn leads to wisdom.

For a while it did seem that we were living in the Information Age, with large information systems enabling us to make new observations. Yet I would argue that we are getting ahead of ourselves, and that we are in fact still living in the Data Age.

So far we have built systems that store vast amounts of data, and we have steadily improved the information we can derive from them. Artificial intelligence brings the ability to draw new inferences from that data. But AI is trained by consuming data, and it generally performs better the more good-quality data it learns from. So to make AI successful we need to go back to our data and clean it up, for want of a better phrase, so that our new and shiny AI engines can do their work and provide us with new, insightful information.

Taking a longer-term view, there is another challenge which, for me, has four key aspects:

- Let’s start with Moore’s Law: the observation that the number of transistors in a dense integrated circuit (IC) doubles about every two years. Over time, this has allowed us to process an ever-increasing amount of data.[1]

- Computer storage capacity has grown too, so we can store far more data.

- At the same time, significant global population growth means there are more people engaged in capturing and processing data (perhaps helping to prove Thomas Watson wrong?[2]).

- Finally, we now have many more ways to collect data, from new sensors to smartphones and smartwatches, so the sources of data available to us keep growing.
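To put the doubling in the first point into rough numbers, here is a simple model. It assumes a clean doubling every two years, which real chips only approximate; $N_0$ (today's transistor count) and $t$ (years elapsed) are illustrative symbols, not figures from any particular chip.

```latex
\begin{aligned}
N(t) &= N_0 \cdot 2^{t/2} \\
\frac{N(20)}{N_0} &= 2^{20/2} = 2^{10} = 1024
\end{aligned}
```

In other words, two decades of Moore’s Law means roughly a thousandfold increase in transistor count, before we even account for the growth in storage, people and data sources.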

And yet we remain in the Data Age, because I believe we are still trying to sort all that data out: to make it useful for generating information and, perhaps, for enhancing our knowledge. There is a wonderful scene in The Simpsons Movie that parodies the well-worn Hollywood plot of the government listening in on everything: a warehouse full of people monitors every call until, finally, someone stands up and declares “We got one!!!”. For all the processing power available, we’re still not turning most of that data into information.

The reality is that volumes of data are still growing exponentially, and we are only scratching the surface of turning them into information we can use. Take the case of Stephen Crohn, whose friends began to fall terminally ill in around 1978 while he himself stayed healthy. It was not until 1996 that immunologist Bill Paxton discovered why: a genetic mutation prevented HIV from binding to the surface of Crohn’s white blood cells. This discovery paved the way for a new class of antiretroviral drugs.

Now, in the throes of another pandemic, Stephen Crohn remains a cornerstone of research as scientists look for similar outlier patients for Covid-19. The advantage this time is twofold: we have a little nugget of information to guide our search, and we have the processing power to churn through vast quantities of data and shorten the research period. Crohn’s case ended in success, but historically the process of identifying suitable candidates might have taken years or even decades, and there is a seemingly unending list of topics to explore. Jason Bobe, a geneticist at the Icahn School of Medicine in New York, has been working on Covid-19 since June 2020, using Facebook groups to reach asymptomatic patients whose genomes he hopes to sequence, and some clues have already been found. A process that took 18 years for HIV is now progressing in under a year, with the aid of a little information and knowledge and a far larger pool of data.

We are, however, approaching a point when that sort of real-time processing of everything becomes possible, through advances in AI and quantum computing. At that point, the Data Protection Act and GDPR will become ever more important in protecting our privacy. Could the future then take the form of one of the dystopias Hollywood has “warned” us about?

So whilst we are now in the Data Age, we must be mindful of the future. This is why we at Sogeti are not just developing AI solutions; we are also ensuring that they adhere to our core values and ethical beliefs.

[1] Moore’s Law is now running up against the physical limits of transistor density, and it is increasingly likely that a new technology will provide the next breakthrough in processing power.

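[2] Thomas John Watson Sr. (February 17, 1874 – June 19, 1956), CEO of IBM, to whom the (probably apocryphal) 1943 prediction of “a world market for maybe five computers” is often attributed.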
