The number of software systems that use artificial intelligence (AI), and machine learning (ML) in particular, is increasing. AI algorithms outperform people in more and more areas, which helps to avoid risks and reduce costs. Despite the many successful applications, AI is not yet flawless. In 2016, Microsoft introduced a Twitter bot called Tay. It took less than 24 hours before Tay generated racist tweets, because users had sent far-right examples to the bot, after which Microsoft decided to take it offline.

This example is not in a critical domain, but AI algorithms are being implemented in increasingly risky environments. Applications in healthcare, transport, and law have major consequences when something goes wrong. In addition, the more AI applications there are, the more influence they have on people’s daily lives. Examples of critical implementations are a self-driving car that has to make a decision in a complex traffic situation, or a system that has to make a cancer diagnosis.

People make mistakes too, so why is it so critical when an AI application makes a mistake? The difference is that a person can explain an error, whereas an AI algorithm usually cannot. Often even the developer of the algorithm cannot explain why a certain choice was made. This is called the “black box” problem: people will not trust AI until they know how it came to a decision. Opening the “black box” is necessary to find out why an algorithm makes a certain decision.

It is also important which data is collected and how the algorithm uses it. Which data is used, which is not, and how is that determined? The answers to these questions are particularly important under the GDPR privacy regulation. The GDPR contains the right to explanation, which means that an institution or company must be able to give a reasonable explanation of an AI decision or risk assessment.
However, the decisions of black box AI models cannot readily be explained, and therefore the application of eXplainable AI (XAI) is necessary. When assessing the quality of an information system, the ISO 25010 model of product quality characteristics is applied. In the book “Testing in the digital age; AI makes the difference”, published by Sogeti in 2018, we added a few quality characteristics specifically for systems that include AI. One of these is “Transparency of choices”, which can be achieved with XAI.

Applying XAI is not necessary for all AI applications. For example, if an AI algorithm suggests a wrong movie on Netflix, the result is that you watch a boring movie. It is not necessary to know why Netflix suggested it. The greater the social, political, legal or economic consequences of the decisions made by an AI algorithm, the more necessary transparency and clarification become.

What is XAI?

XAI (eXplainable Artificial Intelligence) is a machine learning technology that can accurately explain a prediction at an individual level, so that people can trust and understand it. XAI is not a replacement for the “black box” but an addition to the model. This technology provides the following insights:

- How data sources and results are used;

- How the input for the model leads to the output;

- The strengths and weaknesses of the model;

- The specific criteria that the model uses to make a decision;

- Why a certain decision is made, and which alternative decisions it could have made;

- To which type of errors the model is sensitive;

- How to correct errors.

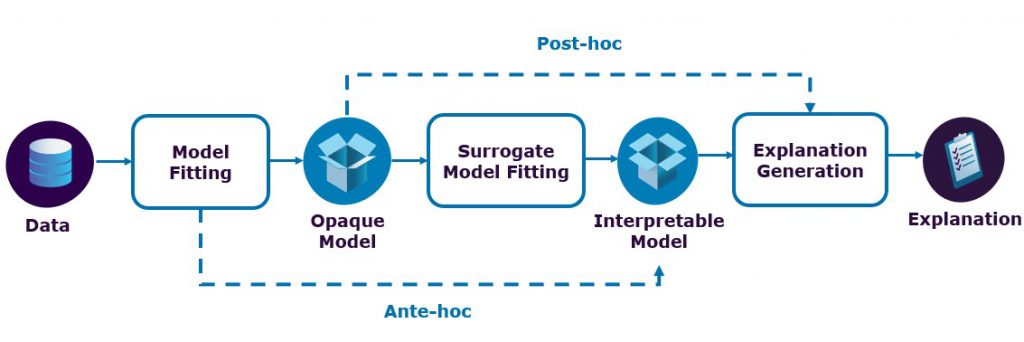

Ante-hoc technique

An ante-hoc technique builds explainability into the model from the start. An example is Bayesian Deep Learning (BDL). With BDL, the degree of certainty of the neural network’s predictions is made visible. Instead of single fixed values, probability distributions are assigned to the weights of the network, and therefore to its predictions and classes. This provides insight into which characteristics lead to which decisions and their relative importance.
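The idea of distributions over weights can be illustrated with a minimal Python sketch. It is not a full Bayesian neural network: it assumes a single neuron whose weight distributions have already been learned, and the names and numbers (`WEIGHT_POSTERIOR`, `BIAS_POSTERIOR`, `predict_with_uncertainty`) are hypothetical. Sampling the weights many times yields both a prediction and a spread that indicates how certain the model is.

```python
import math
import random
import statistics

# Hypothetical, made-up posterior: each weight is a Gaussian (mean, std)
# instead of one fixed number. In practice these would be learned from data.
WEIGHT_POSTERIOR = [(2.0, 0.1), (-1.0, 0.8)]  # feature 0: certain, feature 1: uncertain
BIAS_POSTERIOR = (0.0, 0.1)


def sample_prediction(features, rng):
    """Draw one set of weights from the posterior and return a probability."""
    weights = [rng.gauss(mu, sigma) for mu, sigma in WEIGHT_POSTERIOR]
    bias = rng.gauss(*BIAS_POSTERIOR)
    logit = bias + sum(w * x for w, x in zip(weights, features))
    return 1.0 / (1.0 + math.exp(-logit))


def predict_with_uncertainty(features, n_samples=2000, seed=0):
    """Monte Carlo estimate of the predictive mean and its standard deviation."""
    rng = random.Random(seed)
    draws = [sample_prediction(features, rng) for _ in range(n_samples)]
    return statistics.mean(draws), statistics.stdev(draws)


if __name__ == "__main__":
    for features in ([1.0, 0.0], [0.0, 2.0]):
        mean, spread = predict_with_uncertainty(features)
        print(f"input={features} probability={mean:.2f} uncertainty={spread:.2f}")
```

An input that depends on the uncertain weight gets a wider spread than one that depends on the well-determined weight, which is the kind of certainty information the text describes.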

Post-hoc technique

A post-hoc technique explains a model after it has been trained. An example is Local Interpretable Model-Agnostic Explanations (LIME). LIME is often used for image classification tasks and works by fitting a simple, interpretable linear model around a single prediction. To find out which parts of the input contribute to the prediction, we divide the input into pieces. When interpreting a photo, the photo is divided into pieces, some pieces are hidden or altered, and these perturbed versions are fed to the model again. The change in the model’s output indicates which parts of the photo influence its interpretation. This also gives insight into the reasoning behind the algorithm’s decisions.
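The procedure can be sketched in a few lines of Python. This is a simplified illustration in the spirit of LIME, not the `lime` library itself: `black_box` is a hypothetical stand-in for an opaque classifier, the photo is reduced to four pieces that are either visible (1) or hidden (0), and all on/off combinations are tried instead of random samples. A weighted linear model is then fitted to the black box outputs, and its coefficients serve as the importance of each piece.

```python
import itertools
import math


def black_box(mask):
    """Hypothetical opaque model: score for a photo with some pieces hidden."""
    return 0.1 + 0.6 * mask[2] + 0.2 * mask[0] + 0.1 * mask[0] * mask[2]


def solve(a, b):
    """Solve the linear system a x = b by Gaussian elimination with pivoting."""
    n = len(b)
    m = [row[:] + [b[i]] for i, row in enumerate(a)]
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        rest = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - rest) / m[r][r]
    return x


def explain(model, n_segments, kernel_width=0.75):
    """Fit a locally weighted linear surrogate; return one weight per piece."""
    rows, targets, weights = [], [], []
    for mask in itertools.product([0, 1], repeat=n_segments):
        hidden_fraction = 1 - sum(mask) / n_segments
        # Perturbed photos closer to the original count more heavily.
        weights.append(math.exp(-(hidden_fraction ** 2) / kernel_width ** 2))
        rows.append([1.0, *mask])  # leading 1.0 is the intercept term
        targets.append(model(mask))
    k = n_segments + 1
    # Weighted least squares via the normal equations (X^T W X) beta = X^T W y.
    xtwx = [[sum(w * r[i] * r[j] for w, r in zip(weights, rows))
             for j in range(k)] for i in range(k)]
    xtwy = [sum(w * r[i] * y for w, r, y in zip(weights, rows, targets))
            for i in range(k)]
    return solve(xtwx, xtwy)[1:]  # drop the intercept


if __name__ == "__main__":
    for index, weight in enumerate(explain(black_box, n_segments=4)):
        print(f"piece {index}: importance {weight:+.3f}")
```

For this toy model, piece 2 receives the largest weight, followed by piece 0, which matches how `black_box` was constructed. A real image explainer would segment the photo into superpixels and sample perturbations at random rather than enumerate them.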

How is Sogeti implementing XAI?

The Testing^A.I. team of Sogeti in the Netherlands implements XAI as an audit layer in machine learning models. This audit layer ensures transparency of the model so that the GDPR guidelines are met. XAI can be applied to speech, text and image recognition. The implementation of XAI is part of the end-to-end AI solution that Sogeti offers. Together with our customers, we strive for reliable and transparent AI solutions.

This article was written by Anouk Leemans. Anouk is an Agile Test Engineer and a member of the Testing^A.I. team of Sogeti’s Digital Assurance & Testing practice in the Netherlands.

Rik Marselis reviewed the article.

