IN BOTS WE TRUST

August 24, 2018

We know we can’t trust bots.

What can we do to trust them?

Can other bots help us with that?

Continued from Part 2 – How Bots Learn, Unlearn, and Relearn

Test Driven Development

TDD (Test Driven Development) is a development method that moves the developer’s focus from the code to the design of the solution.

TDD requires the developer to first write a test case as a unit test, then write the code that passes it. Then comes another test, another piece of code, another test, and so on. Because a test case can be analyzed without reading any code, the developer can validate and improve the design of the solution without thinking about the complex code.

Whenever a test case changes, the code has to change for the test case to pass. That is why it is called “Test Driven” development.
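As a minimal sketch of the test-first cycle described above, the snippet below uses Python's standard `unittest` module. The function `ticket_price` and its discount rule are hypothetical and exist only for illustration. The tests come first and describe the design, and the function is then written with just enough logic to pass them.

```python
import unittest


# Step 1: the tests are written first and describe the desired behaviour.
class TicketPriceTest(unittest.TestCase):
    def test_discount_is_applied(self):
        self.assertEqual(ticket_price(100.0, 0.25), 75.0)

    def test_price_is_never_negative(self):
        # A discount above 100 % must not produce a negative price.
        self.assertEqual(ticket_price(100.0, 1.5), 0.0)


# Step 2: only now is the code written, with just enough logic to pass.
def ticket_price(base_price: float, discount: float) -> float:
    """Return the discounted price, clamped so it never drops below zero."""
    return max(0.0, base_price * (1.0 - discount))
```

Saved in a file, the tests can be run with `python -m unittest <file>`. Changing an expectation in a test forces a change in `ticket_price`, which is the “test driven” part.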

Develop and govern bots with TDD

TDD is perfect for bots based on reinforcement learning. The developer still creates the required unit tests, as KPIs on the QA layer, and the bot configures itself without the developer having to change any code. The developer only has to add another sensor (input) or motor (output) and some KPIs.
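The idea of KPIs steering a self-configuring bot can be sketched roughly as follows. This is a toy under stated assumptions, not the Cognitive QA implementation: the class `PricingBot`, the two KPI functions and the demand values are all hypothetical, and simple random hill climbing stands in for real reinforcement learning. The point it illustrates is that the developer only writes KPIs, while the bot adjusts its own parameters until every KPI is met.

```python
import random
from typing import Callable, List

# Sensor readings the KPIs are evaluated on (hypothetical demand levels, 0..1).
DEMANDS = [0.0, 0.25, 0.5, 0.75, 1.0]

Policy = Callable[[float], float]  # maps a sensor reading to a motor output (a price)
Kpi = Callable[[Policy], float]    # returns a penalty; 0.0 means the KPI is met


def kpi_no_negative_price(policy: Policy) -> float:
    # Penalty: total amount by which prices fall below zero.
    return sum(max(0.0, -policy(d)) for d in DEMANDS)


def kpi_average_price_at_least_20(policy: Policy) -> float:
    average = sum(policy(d) for d in DEMANDS) / len(DEMANDS)
    return max(0.0, 20.0 - average)


class PricingBot:
    """Toy bot: price = weight * demand + bias, tuned only through KPI feedback."""

    def __init__(self, kpis: List[Kpi], seed: int = 0) -> None:
        self.kpis = kpis
        self.params = [-50.0, -10.0]  # deliberately bad start: [weight, bias]
        self.rng = random.Random(seed)

    def price(self, demand: float, params=None) -> float:
        weight, bias = params if params is not None else self.params
        return weight * demand + bias

    def penalty(self, params=None) -> float:
        return sum(kpi(lambda d: self.price(d, params)) for kpi in self.kpis)

    def train(self, max_steps: int = 10_000) -> int:
        """Random hill climbing (a stand-in for RL): keep changes that lower the penalty."""
        for step in range(max_steps):
            if self.penalty() == 0.0:
                return step  # all KPIs are met
            candidate = [p + self.rng.gauss(0.0, 5.0) for p in self.params]
            if self.penalty(candidate) < self.penalty():
                self.params = candidate
        return max_steps


bot = PricingBot(kpis=[kpi_no_negative_price, kpi_average_price_at_least_20])
bot.train()
```

Adding a further KPI means appending one more function to the `kpis` list; the code of `PricingBot` itself stays untouched.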

Darwin vs. Newton

Using TDD on bots would be a Darwinian approach to software development. TDD will improve the bots from version to version by guiding the bots’ evolution with KPIs.

This contrasts with the traditional Newtonian development method (the waterfall method), where the universe is perceived as a mechanical clock: “if you know enough details, then you will be able to predict anything”. The Newtonian approach gives the ability to understand “how” things happen, while the Darwinian approach only gives the ability to understand “if” things happen.

Let me give an example:

A ticket-selling application sometimes makes an error and sells tickets with a negative price.

- If this application was developed with the Newtonian approach, then the source code can be debugged to find out “how” the ticket price became negative. This error can then be fixed.

- If this application was developed with a complex bot, then nobody would be able to understand, and therefore debug, the source code. A KPI could have been set to measure “if” the price becomes negative, so that it can correct the bot to not do that again. Nobody would understand “how” the price became negative, only “if” it became negative and “if” it has been handled.
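The second case in the example above can be sketched as a small KPI monitor wrapped around a black-box pricing bot. Everything here is an assumption made for illustration: `NegativePriceKpi`, `opaque_bot` and the `penalise` callback are hypothetical names, and the “bot” is a trivial function standing in for a model nobody can read. The monitor never inspects how the price was computed; it only detects if the price is negative, blocks the sale, and passes a correction signal back.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List, Optional

Order = Dict[str, float]


def opaque_bot(order: Order) -> float:
    # Stand-in for a complex bot whose internals are treated as unknown.
    return order["base"] - order["discount"]


@dataclass
class NegativePriceKpi:
    """Checks *if* a price is negative, without knowing *how* it was computed."""

    bot: Callable[[Order], float]
    penalise: Callable[[Order, float], None]  # feedback channel to the bot
    violations: List[Order] = field(default_factory=list)

    def quote(self, order: Order) -> Optional[float]:
        price = self.bot(order)
        if price < 0:
            self.violations.append(order)  # record that it happened
            self.penalise(order, price)    # correct the bot
            return None                    # block the sale
        return price


feedback_log = []
kpi = NegativePriceKpi(
    bot=opaque_bot,
    penalise=lambda order, price: feedback_log.append((order, price)),
)
ok_price = kpi.quote({"base": 50.0, "discount": 10.0})
blocked = kpi.quote({"base": 10.0, "discount": 25.0})
```

In this sketch the first order is quoted normally, while the second is blocked and ends up in both `kpi.violations` and `feedback_log`, which is where a learning bot would pick up its correction.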

The Newtonian method seems superior in the example above, but that only holds for simple problems. Bug fixing in very complex IT systems can become a nightmare: solving one bug might reintroduce an old one or create a new one. Combining the Newtonian approach with TDD is defined as TDRL (Test Driven Reinforcement Learning). This won’t solve all problems, since a bot might still do something unexpected, but a KPI can then be added to prevent it from happening again.

For example: if a TDRL bot is used to find cancer in lung images, a KPI could be set that makes the bot unlearn and relearn from the new data set. If the bot is not able to map the inputs to the outputs, it could sound an alarm that it requires more sensors (inputs) or human input to solve the problem. On a larger scale, the bot could request help from a court or the government to solve problems in a democratic way. That would also create precedent for future problems.

In a way TDRL would be even more agile than agile is today!

If we trust the bots, then we might get the opportunity to understand them.

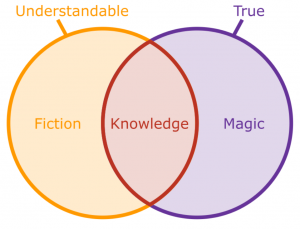

Any concept is either:

- understandable by humans or not understandable by humans

- true or not true.

A combination of “understandable” and “true” can give the following categories:

- Fiction or fantasy: Understandable, but not true.

- Knowledge: Understandable and true.

- Magic: Not understandable, but still true.

Complex machine learning bots go into the magic category. We can only know “the things the bots do” and not “how the bots do it”, and therefore we can’t trust them.

Maybe one day we will get a bot (in a future version of Cognitive QA), that will be able to explain to us mortal humans “how the bots do it” – and maybe the answer will be… 42.

Testing in the digital age is a new Sogeti book that brings an updated view on test engineering, covering testing topics such as robotics, AI, blockchain and much more. Many people provided text, snippets and ideas for the chapters, and this article (part 3 of 3) is my contribution to the book, covering the chapter on robotesting (with a few changes to optimize for the web).

The book Testing in the digital age can be found at: https://www.ict-books.com/topics/digitaltesting-en-info
