Before Docker, applications were hosted on virtual machines or bare-metal servers. Those setups had fewer moving parts, so securing them was relatively straightforward. Docker introduces many more moving parts, which makes securing a Docker environment more complicated.

Scope of this blog:

- Look at the vulnerable areas of a Docker environment

- Walk through an exercise that implements one of the best practices for securing your Docker environment

The moving parts within Docker that need attention are:

- Your containers. You probably have multiple Docker container images, each hosting an individual microservice, and multiple instances of each image running at any given time. Each of those images and instances needs to be secured and monitored separately.

- The Docker daemon, which needs to be secured to keep the containers it hosts safe.

- The host server, which could be bare metal or a virtual machine.

- Overlay networks and APIs that facilitate communication between containers.

- Data volumes or other storage systems that exist outside your containers.

A few best practices that help you run Docker safely:

Resource Quota: Allocate the right resource quota for your container

The small exercise below allocates memory to a container. Without the right memory allocation, a container can run into out-of-memory errors in various scenarios. Tools used for this exercise:

- Pumba: a tool for stress-loading containers; internally it uses stress-ng

- Docker documentation on memory allocation

- Azure VM DS1 v2 (1 vCPU, 3.5 GB memory)

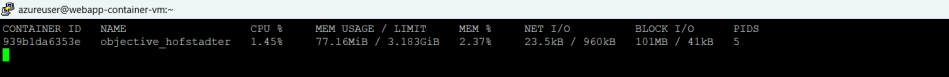

Snapshot of a container running a Python application

Snapshot of the container stats: when a container is started without a memory limit, its limit defaults to the memory of the host. In this case the memory limit displayed is 3.18 GB.
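The same numbers can be read programmatically instead of from a screenshot. The Python sketch below is one way to do it, assuming the Docker CLI is installed and that `docker stats` reports memory in its usual `used / limit` form with binary units (for example `45.2MiB / 3.18GiB`). The helper names and the container name `python-app` are hypothetical.

```python
import re
import subprocess
from typing import Tuple

# Binary unit suffixes as printed by `docker stats`.
_UNITS = {"B": 1, "KiB": 1024, "MiB": 1024**2, "GiB": 1024**3, "TiB": 1024**4}


def parse_size(text: str) -> float:
    """Convert a size such as '3.18GiB' into bytes."""
    match = re.fullmatch(r"([\d.]+)\s*([KMGT]iB|B)", text.strip())
    if match is None:
        raise ValueError(f"unrecognised size: {text!r}")
    value, unit = match.groups()
    return float(value) * _UNITS[unit]


def parse_mem_usage(field: str) -> Tuple[float, float]:
    """Split a 'used / limit' field into (used_bytes, limit_bytes)."""
    used, limit = field.split("/")
    return parse_size(used), parse_size(limit)


def container_mem_usage(name: str) -> Tuple[float, float]:
    """Ask the Docker CLI for one stats sample of the named container."""
    result = subprocess.run(
        ["docker", "stats", "--no-stream", "--format", "{{.MemUsage}}", name],
        capture_output=True, text=True, check=True,
    )
    return parse_mem_usage(result.stdout.strip())
```

Calling `container_mem_usage("python-app")` against a container started without a memory flag should report a limit close to the host's memory, in line with the snapshot described above.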

Stress-loading the container with the command below

Drilling down into the parameters passed in the command:

--vm N: start N workers that continuously call mmap and write to the allocated memory (mmap maps memory into the address space of a process)

--vm-bytes N: mmap N bytes per vm worker

--vm-hang N: sleep N seconds before unmapping the memory
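Put together, the three flags form a single stress-ng argument string. The Python sketch below assembles that string and wraps it in a Pumba invocation, assuming Pumba's `stress` subcommand with its `--duration` and `--stressors` options. The values used (1 worker, 100M, a 1-second hang, a 1-minute run) and the container name `python-app` are illustrative assumptions, not the ones used in the original exercise.

```python
import shlex
from typing import List


def build_stressors(workers: int, bytes_per_worker: str, hang_seconds: int) -> str:
    """Compose the stress-ng memory stressor flags described above."""
    return f"--vm {workers} --vm-bytes {bytes_per_worker} --vm-hang {hang_seconds}"


def build_pumba_command(container: str, stressors: str, duration: str = "1m") -> List[str]:
    """Return an argument list for subprocess.run; nothing is executed here."""
    return ["pumba", "stress", "--duration", duration, "--stressors", stressors, container]


# Illustrative values only (assumptions, not the original command).
stressors = build_stressors(workers=1, bytes_per_worker="100M", hang_seconds=1)
command = build_pumba_command("python-app", stressors)

# shlex.join shows the command as it would be typed in a shell.
print(shlex.join(command))
```

Keeping the command as a list avoids shell-quoting mistakes around the nested stress-ng flags when it is later passed to `subprocess.run`.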

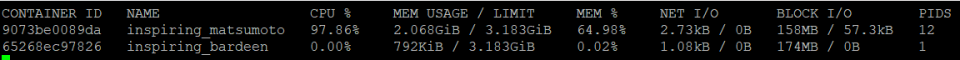

The stats snapshot shows that the container claims more memory than it actually requires

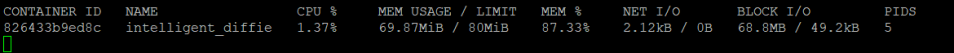

Running the same container with a memory limit of 80 MB
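Docker sets this cap through the `--memory` (or `-m`) flag of `docker run`, and the configured value can be read back in bytes with `docker inspect`, where 0 means no limit. The Python sketch below covers both steps. The container and image names are hypothetical placeholders, and the helpers only build the commands rather than run them.

```python
from typing import List


def build_run_command(name: str, image: str, memory: str = "80m") -> List[str]:
    """Argument list for starting a detached container with a memory cap."""
    return ["docker", "run", "-d", "--name", name, "--memory", memory, image]


def build_inspect_command(name: str) -> List[str]:
    """Argument list that prints the configured memory limit in bytes."""
    return ["docker", "inspect", "--format", "{{.HostConfig.Memory}}", name]


def describe_limit(raw_bytes: str) -> str:
    """Turn the inspect output into a readable label; 0 means no limit is set."""
    limit = int(raw_bytes.strip())
    if limit == 0:
        return "unlimited"
    return f"{limit / 1024**2:g} MiB"
```

Each list can be passed to `subprocess.run(..., check=True)`. For a container started with `--memory 80m`, the inspect command prints 83886080, which `describe_limit` renders as 80 MiB.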

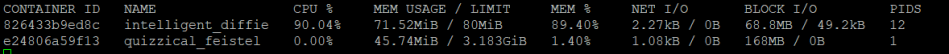

After stress-loading the container with the same parameters, the stats show that it stays well within its allocation

Conclusion: Setting the right resource quotas keeps your Docker environment efficient and prevents a single container from consuming too many system resources. It also enhances security by stopping attackers who try to disrupt services by tying up a large share of resources.

Avoid running your container as a root user

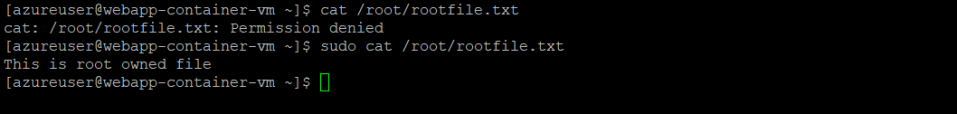

To demonstrate the vulnerability, consider a file owned by the root user in the /root directory.

This file can only be accessed by the root user or by a user with sudo privileges.

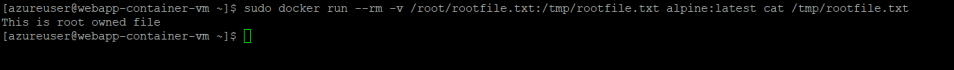

Running a container as root gives you access to the file owned by root

Similarly, one could mount the entire host filesystem in the container and read and write any file in the same manner.
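One way to spot containers exposed to this is to look at the user they were configured with. The value is available through `docker inspect --format '{{.Config.User}}' <container>`, and an empty result means no user was set, so the container runs as root by default. The Python sketch below classifies that value. The helper name is hypothetical, and it only checks the configured user, not what a process inside the container does afterwards.

```python
def runs_as_root(config_user: str) -> bool:
    """Return True if a Config.User value resolves to the root user."""
    # Config.User may be 'user', 'uid', 'user:group' or 'uid:gid'; keep the user part.
    user = config_user.strip().split(":", 1)[0]
    # An empty value means no user was configured, which defaults to root.
    return user in ("", "root", "0")
```

For example, `runs_as_root("")` and `runs_as_root("0:0")` both flag the container, while `runs_as_root("1000:1000")` does not.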

The remedy is to create a user and add that user to the docker group. After that, switch to that user and run your Docker commands.
