As Generative AI (Gen AI) becomes central to enterprise transformation, the need for robust, scalable, and intelligent quality engineering strategies becomes critical. Traditional testing methods fall short when applied to probabilistic systems like applications integrated with Gen AI.

Gen AI systems call for more than standard quality engineering because they are probabilistic. They:

- Generate multiple valid outputs for the same input

- Learn and evolve based on training data and fine-tuning

- Are sensitive to prompt structure, token limits, and context windows

These characteristics introduce challenges like output inconsistency, hallucinations, and excessive verbosity—making regression testing, automation, and validation significantly more complex.
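To make the regression-testing challenge concrete, here is a minimal sketch of a tolerance-based check that replaces exact-match assertions. It is an illustration only, not the framework described in this article: the function names, the 0.6 threshold, and the use of word-level `difflib` similarity as a stand-in for a true semantic metric are all assumptions.

```python
from difflib import SequenceMatcher
from itertools import combinations


def similarity(a: str, b: str) -> float:
    """Word-level lexical similarity in [0, 1].

    Assumption: a simple stand-in; a real suite would more likely
    use an embedding-based or other semantic metric.
    """
    return SequenceMatcher(None, a.lower().split(), b.lower().split()).ratio()


def consistency_score(outputs: list[str]) -> float:
    """Lowest pairwise similarity across repeated runs of one prompt."""
    if len(outputs) < 2:
        return 1.0
    return min(similarity(a, b) for a, b in combinations(outputs, 2))


def passes_regression(outputs: list[str], reference: str,
                      threshold: float = 0.6) -> bool:
    """Pass only if every sampled output stays close to the approved reference.

    The threshold is hypothetical and would need tuning per use case.
    """
    return all(similarity(out, reference) >= threshold for out in outputs)


if __name__ == "__main__":
    reference = "Refunds are processed within five business days."
    samples = [
        "Refunds are processed within five business days.",
        "Refunds are usually processed within five business days.",
    ]
    print("consistency:", round(consistency_score(samples), 3))
    print("regression pass:", passes_regression(samples, reference))
```

Sampling the same prompt several times and asserting on a similarity floor, rather than on one exact string, lets a regression suite accept multiple valid phrasings while still flagging answers that drift away from the approved one.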

At Sogeti, we’ve gone beyond surface-level validation to develop a deep, architecture-focused quality engineering and testing framework, one that ensures Gen AI systems deliver satisfactory functional output and are trustworthy, scalable, and production-ready. We engineer quality frameworks for Gen AI that enable faster innovation, reduced risk, and stronger competitive advantage.

We pioneered Architecture-Focused AI Testing

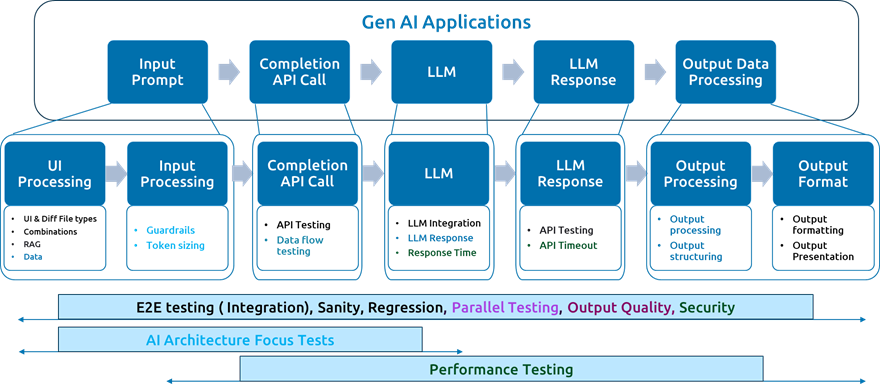

What sets our approach apart is our deep integration with the AI system’s architecture. We don’t just test outputs—we test the entire Gen AI pipeline, from data ingestion to output generation, across multiple layers of the system.

Our systematic approach to testing Gen AI-based systems

Key Pillars of Our Testing Strategy

- AI Architecture Testing

  - We validate Gen AI-based applications end to end, from input processing, guardrails, and LLM integration through to output APIs and output processing.

  - Our tests cover both functional and non-functional aspects, ensuring the system behaves predictably under varied conditions.

- Guardrail & Token Testing

  - We’ve developed a systematic approach, with multiple test cases, to validate output safety, length, profanity, and format.

  - Token testing ensures the system handles maximum input sizes without degradation.

- Comparative & Parallel Testing

  - We devised a systematic way to rate output: our 10-point rating system helps analyse output quality across various LLMs. We benchmark outputs across multiple Gen AI platforms under similar conditions.

  - It also rates the quality of the prompt itself. This helps clients choose the most suitable model for their use case, based on objective quality metrics.

- Data Flow & Data Variation Testing

  - We validate referential integrity across use cases, scenarios, and prompt chains.

  - Our multilingual and format-diverse test data ensures the system performs reliably across languages and edge cases.

- Output Quality Testing

  - We use a framework to assess consistency, correlation, and semantic accuracy of outputs.

  - Both objective and subjective test cases are used to simulate real-world usage.

- Automation & Performance Testing

  - Our Selenium-based automation suite covers sanity, regression, and E2E flows.

  - Performance testing is embedded into CI/CD pipelines, with API-layer load testing and real-time monitoring.
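As an illustration of the guardrail and token pillar, the sketch below shows how length, profanity, and format checks on a model output might be automated. It is a simplified assumption, not the test suite described here: `GuardrailReport`, `check_output`, the blocked-term list, and the limits are hypothetical, and whitespace-separated words are used as a rough proxy for tokens where a real test would use the target model's own tokenizer.

```python
import json
from dataclasses import dataclass, field


@dataclass
class GuardrailReport:
    """Collects the names of the guardrail checks that failed."""
    failures: list[str] = field(default_factory=list)

    @property
    def passed(self) -> bool:
        return not self.failures


def approx_tokens(text: str) -> int:
    """Rough token count (assumption: one whitespace-separated word per token)."""
    return len(text.split())


def check_output(text: str, *, max_tokens: int = 200,
                 blocked_terms: frozenset[str] = frozenset({"damn"}),
                 require_json: bool = False) -> GuardrailReport:
    """Run length, profanity, and format checks on one model output."""
    report = GuardrailReport()

    # Length: flag outputs that exceed the allowed budget.
    if approx_tokens(text) > max_tokens:
        report.failures.append("length")

    # Profanity: compare normalised words against a (hypothetical) blocklist.
    words = {w.strip(".,!?;:\"'").lower() for w in text.split()}
    if words & blocked_terms:
        report.failures.append("profanity")

    # Format: optionally require the output to be valid JSON.
    if require_json:
        try:
            json.loads(text)
        except json.JSONDecodeError:
            report.failures.append("format")

    return report


if __name__ == "__main__":
    print(check_output('{"answer": "ok"}', require_json=True).passed)
    print(check_output("word " * 300).failures)
```

Returning a report with named failures, rather than a single boolean, makes it easier to see which guardrail regressed when the same checks run across many prompts.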

Confidence through engineering rigor

Our testing strategy goes well beyond finding bugs: our quality engineering approach is about building confidence.

We’ve applied this strategy across 40+ enterprise Gen AI use cases, continuously refining our methods based on real-world feedback and evolving model behaviour.

We have also deployed automated sanity and regression suites for priority use cases.

Conclusion: Engineering Trust in Gen AI

As enterprises scale their Gen AI adoption, we must evolve from reactive testing to proactive quality engineering. Our approach ensures that Gen AI systems deliver quality output consistently and reliably.

If you’re ready to move beyond experimentation and scale Gen AI into production with a structured, results-driven approach, get in touch to learn how our proven solutions can support your journey.
