Generative AI is reshaping business operations and customer engagement with its autonomous capabilities. However, to quote Uncle Ben from Spider-Man: “With great power comes great responsibility.”

Managing generative AI is challenging because its models already outperform humans in some areas, such as profiling for national security purposes. Sometimes anti-principles explain most clearly why ethics must be enforced, so it is important to understand the following challenges:

- Generative AI can help manage information overload by extracting and generating meaningful insights from large volumes of data but, at the same time, information overload can dilute precise messaging.

- A lack of domain-specific knowledge or context leads to inaccurate information and contextual errors in addition to bias and subjectivity.

- There may be limited human resources to oversee training and regulate output, due to a lack of experienced personnel.

- Stale data may be used in training.

- Elite and/or not always ethically sourced data may be used for training.

- There may be a lack of resilience in execution.

- Scalability and cost tradeoffs may cause organizations to consider a shortcut.

Although complex, these challenges can be alleviated on a technical level. Monitoring, for example, helps keep the behavior of these models robust and observable. Additionally, since generative AI capability is exposing businesses to new risks, there is a need for well-thought-through governance, guardrails, and the following methods:

- Model benchmarking

- Model hallucination

- Self-debugging

- Guardrails.ai and RAIL specs

- Auditing LLMs with LLMs

- Detecting LLM-generated content

- Differential privacy and homomorphic encryption

- EBM (Explainable Boosting Machine)
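To make one of the listed methods concrete, the following is a minimal sketch of differential privacy using the Laplace mechanism on a counting query. The function names `laplace_noise` and `private_count` are hypothetical, and the code is illustrative only: it assumes a sensitivity of 1 (adding or removing one record changes the count by at most 1) and uses ordinary floating-point sampling, which is known to be unsuitable for production-grade privacy guarantees.

```python
import random


def laplace_noise(scale: float, rng: random.Random) -> float:
    """Sample zero-mean Laplace noise with the given scale.

    The difference of two independent exponential variables with
    rate 1/scale follows a Laplace(0, scale) distribution.
    """
    rate = 1.0 / scale
    return rng.expovariate(rate) - rng.expovariate(rate)


def private_count(records, predicate, epsilon: float, rng: random.Random) -> float:
    """Return a noisy count of the records that match the predicate.

    Assumption: a counting query has sensitivity 1, so the noise
    scale is 1 / epsilon. A smaller epsilon means more noise and
    stronger privacy.
    """
    if epsilon <= 0:
        raise ValueError("epsilon must be positive")
    true_count = sum(1 for record in records if predicate(record))
    return true_count + laplace_noise(1.0 / epsilon, rng)
```

A caller might run `private_count(ages, lambda a: a >= 65, epsilon=0.5, rng=random.Random())` to publish an approximate count without revealing whether any single individual is in the data. The choice of `epsilon` is a policy decision rather than a purely technical one.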

It is crucial that generative AI design takes care of the following aspects of ethical AI:

- Ensuring ethical and legal compliance – Generative AI models can produce outputs that may be biased, discriminatory, or infringe on privacy rights.

- Mitigating risk – Generative AI models can produce unexpected and unintended outputs that can cause harm or damage to individuals or organizations.

- Improving model accuracy and explainability – Generative AI models can be complex and difficult to interpret, leading to inaccuracies in their outputs. Governance and guardrails can improve the accuracy of the model by ensuring it is trained on appropriate data and its outputs are validated by human experts.

- Ethical generative AI approaches need to differ according to the purpose and impact of the solution: diagnosing and treating life-threatening diseases calls for a much more rigorous governance model than using generative AI to suggest marketing content for products. Even the upcoming EU AI Act prescribes a risk-based approach, classifying AI systems as minimal-risk, limited-risk, high-risk, or unacceptable-risk.

- AIs must be designed to say “no,” a principle called “Humble AI.”

- Ethical data sourcing is particularly important with generative AI, because the resulting model can supplant the efforts of people who never granted explicit rights to their work.

- Inclusive AI: most AI systems today are English-only or, at best, treat English as their first language.
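The “Humble AI” principle and the risk-based approach above can be combined in a simple abstention rule: the system declines to answer when its confidence falls below a threshold that tightens with the risk tier. The sketch below is an assumption-laden illustration, not a prescribed implementation. The names `MIN_CONFIDENCE`, `Answer`, and `humble_answer` are hypothetical, the threshold values are arbitrary placeholders, and the confidence score is assumed to come from some upstream calibration step that is not shown.

```python
from dataclasses import dataclass
from typing import Optional

# Hypothetical minimum confidence per risk tier (illustrative values only).
MIN_CONFIDENCE = {"minimal": 0.50, "limited": 0.70, "high": 0.95}


@dataclass
class Answer:
    text: Optional[str]  # None when the system declines to answer
    abstained: bool
    reason: str


def humble_answer(candidate: str, confidence: float, risk_tier: str) -> Answer:
    """Release the candidate answer only if confidence meets the tier's bar."""
    if risk_tier == "unacceptable":
        # Assumption: use cases in this tier are never served at all.
        return Answer(None, True, "use case is not permitted")
    if risk_tier not in MIN_CONFIDENCE:
        raise ValueError(f"unknown risk tier: {risk_tier}")
    threshold = MIN_CONFIDENCE[risk_tier]
    if confidence < threshold:
        return Answer(
            None,
            True,
            f"confidence {confidence:.2f} is below {threshold:.2f}; defer to a human",
        )
    return Answer(candidate, False, "confidence meets threshold")
```

With these placeholder values, the same candidate answer at confidence 0.80 would be released for a limited-risk marketing assistant but withheld and escalated to a human for a high-risk medical use case.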

USING SYNTHETIC DATA FOR REGULATORY COMPLIANCE

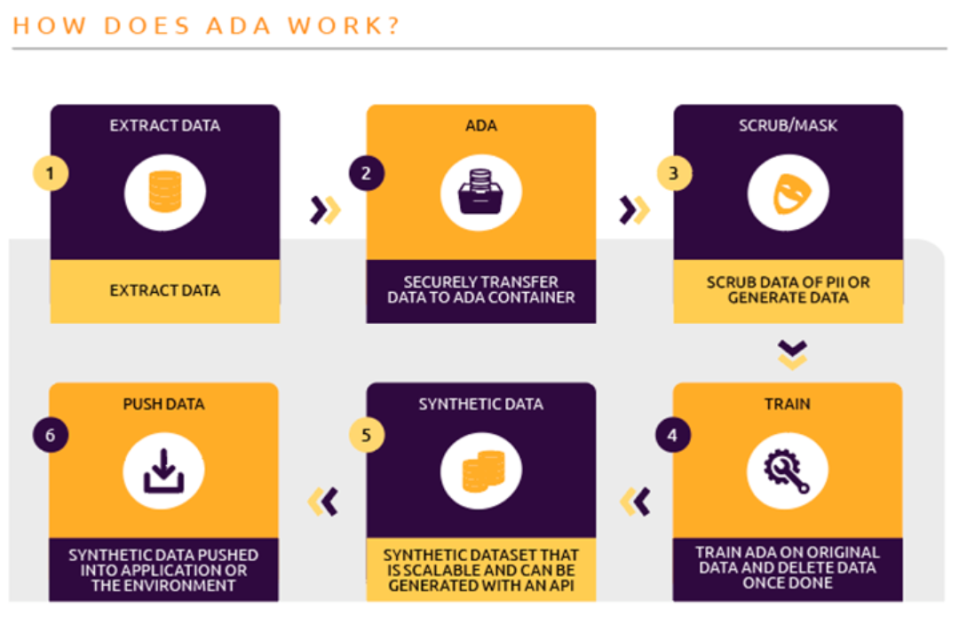

Försäkringskassan, the Swedish authority responsible for social insurance benefits, faced a challenge in handling vast amounts of data containing personally identifiable information (PII), including medical records and symptoms, while complying with the GDPR. It needed a way to test applications and systems with relevant data without compromising client privacy. Collaborating with Försäkringskassan, Sogeti delivered a scalable generative AI microservice, using generative adversarial network (GAN) models to reduce this privacy risk.

This solution involved feeding real data samples into the GAN model, which learned the data’s characteristics. The output was synthetic data closely mirroring the original dataset in statistical similarity and distribution, while not containing any PII. This allowed the data to be used for training AI models, text classification, chatbot Q&A, and document generation.
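The two properties claimed for the synthetic data, statistical similarity and absence of real records, can be checked with simple tests. The following is a minimal sketch of such checks and not the method used in the project described here: the function names `column_drift` and `exact_copies` are hypothetical, rows are assumed to be dictionaries of numeric values with identical keys, and an exact-match check is only a crude first signal of leakage rather than a full privacy assessment.

```python
from statistics import mean, pstdev


def column_drift(real, synthetic):
    """Compare per-column mean and standard deviation of two datasets.

    Returns, for each column, the absolute gap between the real and
    synthetic values. Small gaps suggest similar marginal distributions.
    """
    report = {}
    for col in real[0]:
        real_values = [row[col] for row in real]
        synth_values = [row[col] for row in synthetic]
        report[col] = {
            "mean_gap": abs(mean(real_values) - mean(synth_values)),
            "std_gap": abs(pstdev(real_values) - pstdev(synth_values)),
        }
    return report


def exact_copies(real, synthetic):
    """Count synthetic rows that reproduce a real row verbatim."""
    real_rows = {tuple(sorted(row.items())) for row in real}
    return sum(1 for row in synthetic if tuple(sorted(row.items())) in real_rows)
```

In practice, a release gate might require every gap in `column_drift` to stay under an agreed tolerance and `exact_copies` to return zero before synthetic data is handed to test teams.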

The implementation of this synthetic data solution marked a significant achievement. It provided Försäkringskassan with realistic and useful data for software testing and AI model improvement, ensuring compliance with legal requirements. Moreover, this innovation allowed for efficient scaling of data, benefiting model development and testing.

Försäkringskassan’s commitment to protecting personal data and embracing innovative technologies not only ensured regulatory compliance but also propelled it to the forefront of digital solutions in Sweden. Through this initiative, Försäkringskassan, the Swedish Social Insurance Agency, contributed significantly to realizing its vision of a society where individuals can feel secure even when life takes unexpected turns.

MARKET TRENDS

The market for trustworthy generative AI is flourishing, driven by these key trends:

- Regulatory compliance: Increasing government regulations demand rigorous testing and transparency.

- User awareness: Users are increasingly aware of the importance of trustworthy and ethical AI systems.

- Operationalization of ethical principles: Specialized consulting guides AI developers in implementing technical mitigations for ethical risks.

RESPONSIBLE USE OF GENERATIVE AI

Ethical considerations are at the heart of these groundbreaking achievements. The responsible use of generative AI ensures that while we delve into the boundless possibilities of artificial intelligence, we do so with respect for privacy and security. Ethical generative AI, exemplified by Försäkringskassan’s initiative, paves the way for a future where innovation and integrity coexist in harmony.

“ETHICAL GENERATIVE AI IS THE ART OF NURTURING MACHINES TO MIRROR NOT ONLY OUR INTELLECT BUT THE VERY ESSENCE OF OUR NOBLEST INTENTIONS AND TIMELESS VALUES.”

INNOVATION TAKEAWAYS

TRANSPARENCY AND ACCOUNTABILITY

Generative AI systems should be designed with transparency in mind. Developers and organizations should be open about the technology’s capabilities, limitations, and potential biases. Clear documentation and disclosure of the data sources, training methods, and algorithms used are essential.

BIAS MITIGATION

Generative AI models often inherit biases present in their training data. It’s crucial to actively work on identifying and mitigating these biases to ensure that AI-generated content does not perpetuate or amplify harmful stereotypes or discrimination.
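Identifying bias starts with measuring it. One common measure is the demographic parity gap: the difference between the highest and lowest rate of favorable outcomes across groups. The sketch below is a minimal illustration under stated assumptions: the function names `selection_rates` and `demographic_parity_gap` are hypothetical, and each generated output is assumed to have already been labeled with the group it concerns and whether the outcome was favorable, which is a separate and nontrivial step.

```python
from collections import defaultdict


def selection_rates(outcomes):
    """Compute the favorable-outcome rate per group.

    outcomes: iterable of (group, favorable) pairs, where favorable
    is True when the generated output was judged favorable.
    """
    totals = defaultdict(int)
    favorable = defaultdict(int)
    for group, ok in outcomes:
        totals[group] += 1
        favorable[group] += int(ok)
    return {group: favorable[group] / totals[group] for group in totals}


def demographic_parity_gap(outcomes):
    """Return the gap between the best- and worst-served groups.

    A gap of 0 means every group receives favorable outcomes at the
    same rate; larger gaps indicate a disparity worth investigating.
    """
    rates = selection_rates(outcomes)
    if not rates:
        raise ValueError("no outcomes to evaluate")
    return max(rates.values()) - min(rates.values())
```

A single metric like this does not prove or rule out bias on its own. It is a starting point for review, and the acceptable gap depends on the use case and its risk level.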

USER CONSENT AND CONTROL

Users should be able to control and consent to the use of generative AI in their interactions. This includes clear opt-in/opt-out mechanisms. User preferences, privacy, and data protection principles should also be respected.
