Intent classification is crucial for many AI systems, such as conversational bots, because it helps them understand user queries and respond appropriately. Traditional methods such as random forests, logistic regression, RNNs, and CNNs have advanced intent classification, but they often struggle with accuracy and with the complexities of natural language, such as ambiguity and context. The Hybrid Retrieval-Augmented Generation (RAG) approach addresses these challenges by combining the strengths of retrieval-based and generative models, providing a more nuanced, simpler, and more adaptable solution for intent classification.

When designing an intent classification solution, developers typically focus on three key aspects:

- Rapid implementation

- Ease of maintenance and cost efficiency

- Achieving optimal accuracy

Let’s explore how this approach addresses each of these aspects.

Challenges with traditional intent classification

While traditional intent classification models work satisfactorily in structured environments, they face several challenges in real-world or otherwise complex environments. These models often rely heavily on pre-labeled data and predefined features, which limits their ability to understand the nuances of language. Additionally, as user queries evolve, traditional models struggle to keep up and require extensive retraining to maintain accuracy. Ambiguity in user input further complicates the task, leading to misclassifications and poor user experiences.

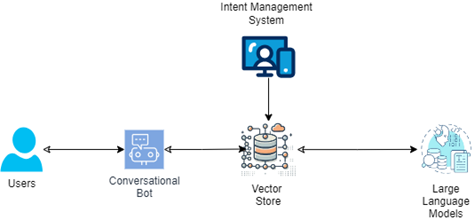

Detailed Architecture – Hybrid RAG approach for intent classification

The workflow starts by ingesting the intents, entities, and relevant utterances. These inputs are embedded and stored as vectors in a vector store, which forms the foundation of an Intent Management System. This system also handles the ongoing management of intents, entities, and utterances. When a user submits a query, the system first searches for it in the vector store using cognitive (AI-powered) search techniques. An LLM then validates the result through a well-crafted prompt. The results from the AI search and the LLM are evaluated against a confidence threshold to determine the final intent. Let’s explore the implementation in more detail.
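The final step, evaluating the search result and the LLM result against a confidence threshold, can be sketched as follows. This is a minimal illustration under assumed rules, not the exact policy of any particular system. `Candidate`, `resolve_intent`, the 0.75 threshold, and the `"unknown"` fallback are all hypothetical. The sketch also assumes that both stages report scores normalized to [0, 1].

```python
from dataclasses import dataclass


@dataclass
class Candidate:
    """An intent proposed by one stage, with a confidence score assumed to be in [0, 1]."""
    intent: str
    score: float


def resolve_intent(
    search: Candidate,
    llm: Candidate,
    threshold: float = 0.75,  # hypothetical confidence threshold
    fallback: str = "unknown",
) -> str:
    """Combine the vector-search match and the LLM validation into a final intent.

    Illustrative policy (an assumption, not a prescribed rule):
      1. Both stages agree and at least one clears the threshold -> accept.
      2. Otherwise, accept the more confident stage if it clears the threshold.
      3. If no stage is confident -> return the fallback (e.g. ask the user to clarify).
    """
    if search.intent == llm.intent and max(search.score, llm.score) >= threshold:
        return search.intent
    confident = [c for c in (search, llm) if c.score >= threshold]
    if confident:
        return max(confident, key=lambda c: c.score).intent
    return fallback


print(resolve_intent(Candidate("reset_password", 0.82), Candidate("reset_password", 0.90)))  # reset_password
print(resolve_intent(Candidate("billing", 0.60), Candidate("refund", 0.88)))  # refund
print(resolve_intent(Candidate("billing", 0.55), Candidate("refund", 0.60)))  # unknown
```

Returning a fallback instead of forcing a guess lets a bot ask a clarifying question when neither stage is confident. In practice, the threshold would need tuning on real traffic.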

To fully appreciate the benefits of the Hybrid RAG approach, it’s essential to understand its architecture and how each component contributes to better intent classification.

- Vector store. The vector store is the backbone of the Hybrid RAG approach. It contains:

  - Tagged utterances: Sample user queries tagged with their corresponding intents (with descriptions), categories, and entities.

  - Detailed descriptions: For each intent, a detailed description generated by a large language model (LLM) is stored, adding context for classification.

  - Document summaries: When utterances aren’t available, summaries of relevant documents are stored, allowing the system to classify intents based on the content of these documents.

- LLM-generated tags. A key feature of Hybrid RAG is the use of LLMs, which generate:

  - Detailed intent descriptions: These descriptions provide a deeper understanding of each intent, capturing details that traditional models might miss.

  - Document summaries: LLMs summarize documents, and the summaries are stored in the vector store to improve the matching of user queries.

- Query mechanism. When a user submits a query, it is processed through the following steps:

  - Vector conversion: The query is converted into a vector representation (an embedding).

  - Vector matching: This vector is compared against the vectors in the vector store to identify the most relevant intents or documents.

  - Similarity scoring: Similarity scores are calculated to rank the candidates and determine the best match.

  - Intent confirmation: The LLM is given the query, the top matches, and their similarity scores. It confirms the intent by cross-checking the scores against the context. If needed, it refines the classification by considering additional context or patterns. This step provides a higher level of accuracy than vector matching alone.

- Response generation. Once the intent is confirmed, the system generates a response through:

  - Contextual relevance: The detailed descriptions and entity lists stored in the vector store are used to tailor the response to the specific context of the query.

  - Dynamic adaptation: The system continuously adapts its responses to evolving language and intent patterns, improving its effectiveness over time.
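The query mechanism above (vector conversion, matching, similarity scoring, and preparation for LLM confirmation) can be sketched in plain Python. This is a self-contained illustration that rests on several assumptions:

- `embed` is a toy bag-of-words stand-in for a real embedding model.
- The in-memory list stands in for a vector database.
- `Record`, `search`, and `confirmation_prompt` are hypothetical names.

The sketch only builds the confirmation prompt. Sending it to an LLM is left to whichever client you use.

```python
import math
import re
from collections import Counter
from dataclasses import dataclass, field


def embed(text: str) -> Counter:
    """Toy sparse 'embedding' (word counts); a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    """Cosine similarity between two sparse vectors."""
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


@dataclass
class Record:
    text: str  # tagged utterance, intent description, or document summary
    intent: str
    kind: str  # "utterance" | "description" | "summary"
    vector: Counter = field(init=False)

    def __post_init__(self) -> None:
        self.vector = embed(self.text)  # embedded once, at ingestion time


def search(store: list[Record], query: str, k: int = 3) -> list[tuple[str, float]]:
    """Rank intents by the similarity of their best-matching record; return the top k."""
    q = embed(query)  # vector conversion
    best: dict[str, float] = {}
    for rec in store:  # vector matching + similarity scoring
        score = cosine(q, rec.vector)
        if score > best.get(rec.intent, 0.0):
            best[rec.intent] = score
    return sorted(best.items(), key=lambda kv: kv[1], reverse=True)[:k]


def confirmation_prompt(query: str, candidates: list[tuple[str, float]]) -> str:
    """Build a prompt asking an LLM to confirm (or reject) the retrieved intents."""
    lines = [f"- {intent} (similarity {score:.2f})" for intent, score in candidates]
    return (
        "Classify the user query into one of the candidate intents, or answer 'none'.\n"
        f"Query: {query}\n"
        "Candidates:\n" + "\n".join(lines) + "\n"
        "Reply with the intent name and a confidence between 0 and 1."
    )


store = [
    Record("I forgot my password", "reset_password", "utterance"),
    Record("how do I reset my login password", "reset_password", "utterance"),
    Record("User wants to regain account access by resetting a password", "reset_password", "description"),
    Record("where is my refund", "refund_status", "utterance"),
    Record("User asks about the status of a refund for a returned order", "refund_status", "description"),
]
query = "I cannot remember my password"
candidates = search(store, query)
print(candidates[0][0])  # reset_password
print(confirmation_prompt(query, candidates))
```

Each intent is scored by its best-matching record. A single close utterance, description, or document summary is therefore enough to surface an intent, so the same code works when few utterances exist and descriptions or summaries carry the matching.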

Reference vector store schema

Why Hybrid RAG outperforms traditional intent classification

Hybrid RAG offers several advantages over traditional intent classification models, making it a better choice for complex and changing environments:

- Nuanced understanding of language

  - Traditional models often rely on predefined features or statistical patterns, which can miss the subtleties of natural language. Hybrid RAG, however, uses detailed descriptions, summaries, and tagged utterances, allowing it to understand and classify intents more accurately.

- Adaptability to new queries

  - In environments where language and user queries are constantly changing, traditional models can struggle to adapt. Hybrid RAG, with its LLM-generated tags and document summaries, is more adaptable and can handle new, unseen queries effectively.

- Robustness against ambiguity

  - Ambiguous user queries can lead to misclassifications in traditional models. Hybrid RAG’s vector matching, supported by detailed descriptions and summaries, enables it to handle ambiguity more effectively, leading to more accurate classifications.

- Enhanced accuracy with less data

  - Traditional models typically require large amounts of labeled data to achieve high accuracy. Hybrid RAG, by storing detailed intent descriptions and document summaries, can achieve superior results even with limited data, making it a more efficient solution.

Seamlessly Evolve and Maintain

The Hybrid RAG approach can evolve easily, enabling organizations to maintain such a system seamlessly with minimal human intervention.

Teams can develop simple interfaces to add and maintain intents along with their utterances, entities, and descriptions. The approach also improves adaptability through:

- Real-time adaptation

  - As language patterns change, Hybrid RAG could be enhanced to adapt in real time, continuously updating its vector store with new utterances, descriptions, and summaries and thereby improving its accuracy and relevance over time.

- Integration with other AI systems

  - Hybrid RAG could be integrated with additional AI systems, such as sentiment analysis or context-aware systems, to offer even more nuanced and effective intent classification, leading to more personalized and contextually relevant interactions.

- Advanced LLMs

  - As LLMs advance, Hybrid RAG can leverage these more sophisticated models to generate even more detailed and accurate descriptions and summaries, further enhancing its performance.
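A basic intent-management interface of the kind described above might look like the following sketch. It assumes a toy bag-of-words embedding in place of a real model and an in-memory list in place of a vector database. `IntentStore` and its methods are hypothetical. The sketch illustrates that adding an utterance or replacing a description re-embeds only that one entry. The change is therefore visible to the next query without retraining a model.

```python
import math
import re
from collections import Counter


def embed(text: str) -> Counter:
    """Toy bag-of-words vector; a stand-in for a real embedding model."""
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a.keys() & b.keys())
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


class IntentStore:
    """Hypothetical management layer that keeps an in-memory vector index in sync."""

    def __init__(self) -> None:
        self._entries: list[tuple[str, str, Counter]] = []  # (intent, kind, vector)

    def upsert_intent(self, intent: str, description: str) -> None:
        """Create an intent or replace its description, re-embedding only that entry."""
        self._entries = [e for e in self._entries if not (e[0] == intent and e[1] == "description")]
        self._entries.append((intent, "description", embed(description)))

    def add_utterance(self, intent: str, utterance: str) -> None:
        """Tag a new example utterance; it is searchable immediately."""
        self._entries.append((intent, "utterance", embed(utterance)))

    def remove_intent(self, intent: str) -> None:
        """Drop an intent together with all of its utterances and its description."""
        self._entries = [e for e in self._entries if e[0] != intent]

    def best_match(self, query: str) -> tuple[str, float]:
        """Return the closest intent, or ("unknown", 0.0) if nothing overlaps."""
        q = embed(query)
        scored = [(intent, cosine(q, vec)) for intent, _, vec in self._entries]
        best = max(scored, key=lambda s: s[1], default=("unknown", 0.0))
        return best if best[1] > 0 else ("unknown", 0.0)


store = IntentStore()
store.upsert_intent("track_order", "User wants to know where their order is")
store.upsert_intent("cancel_order", "User wants to cancel an order")
print(store.best_match("parcel hasn't arrived"))  # ('unknown', 0.0)
store.add_utterance("track_order", "my parcel hasn't arrived yet")
print(store.best_match("parcel hasn't arrived")[0])  # track_order
```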

Hypothetical use cases and implementation

To understand the real-world implications of Hybrid RAG, let’s explore a few hypothetical use cases in which this approach is assumed to have been implemented, leading to significant improvements in intent classification accuracy.

Use case 1: Enterprise customer support chatbot

Suppose an organization has implemented a customer support chatbot using traditional intent classification models. The chatbot may frequently misclassify queries related to complex technical issues, resulting in customer frustration and increased manual intervention.

Implementation of Hybrid RAG:

- The organization transitions to a Hybrid RAG approach, storing common user queries and detailed descriptions of technical issues in a vector store.

- The chatbot is then able to match user queries more effectively, even when the language used is ambiguous or complex.

- As a result, the accuracy of intent classification could improve by 25%, and the need for manual intervention could drop by 40%.

Use case 2: Legal document analysis tool

A legal firm used a document analysis tool to classify and summarize legal documents. However, the traditional classification model struggled with new legal terms and evolving case law, leading to inaccurate summaries.

Implementation of Hybrid RAG:

- The firm used LLMs to generate summaries for existing legal documents and stored them in a vector store alongside related case law and statutes.

- By using Hybrid RAG, the tool could match new documents with relevant summaries, even when unfamiliar legal terms were used.

- This led to a 30% improvement in the accuracy of document classification and summarization, significantly aiding the legal team’s efficiency.

Use case 3: E-commerce Product Recommendation System

An e-commerce platform relied on intent classification to recommend products based on user queries. However, the traditional model often failed to understand user intent, especially with vague or complex queries, resulting in poor product recommendations.

Implementation of Hybrid RAG:

- The platform adopted Hybrid RAG, storing product descriptions, customer reviews, and intent-related summaries in a vector store.

- The recommendation system was then able to match user queries with more relevant products, even when the queries were unclear or multi-faceted.

- The accuracy of product recommendations improved by 20%, leading to higher customer satisfaction and increased sales.

Conclusion

Hybrid Retrieval-Augmented Generation (RAG) represents a significant advancement in intent classification for conversational AI. By integrating vector stores, LLMs, and a robust query mechanism, Hybrid RAG offers a more nuanced, accurate, and adaptable approach to understanding user intents. While 100% accuracy is a subjective target, this solution offers a significant improvement over traditional approaches and brings us closer to that target.

Compared to traditional classification models, Hybrid RAG provides superior performance, particularly in dynamic environments where language is constantly evolving. As the field of conversational AI continues to grow, Hybrid RAG offers a promising future for achieving greater accuracy and effectiveness in AI-driven conversations.

For organizations seeking to improve their intent classification mechanisms, Hybrid RAG presents a compelling alternative to traditional models, offering better accuracy, adaptability, and robustness.
