CICD FOR A CLOUD NATIVE MICROSERVICES SYSTEM ON AZURE.

January 24, 2017

Companies aim for a flexible, cheap and innovative business. Moving to the cloud, changing the way of working and making systems more flexible are three drivers for reaching this goal.

Three forces are pushing the continuous DevOps evolution: the Cloud Platform force, the System Architecture force and the Collaboration force.

3 forces and 5 tips to stay relevant on changing DevOps.

The 12-factor methodology describes practices for building and running cloud-native systems. These practices support the business goals above.

… a triangulation on ideal practices for app development, paying particular attention to the dynamics of the organic growth of an app over time, the dynamics of collaboration between developers working on the app’s codebase, and avoiding the cost of software erosion.

Cloud native, microservices and pipelines principles.

Next to practices covering CICD pipelines, the 12 factors also describe practices for systems, such as "Treat backing services as attached resources" and "Export services via port binding". There are more practices and patterns to follow for cloud-native and for service-based systems.

Cloud native: although this term seems to have been hijacked by Pivotal and by an organization that promotes containers, it is not restricted to these technologies. Cloud-native systems are born in the cloud and comply with the cloud rules. As Gartner describes:

… a solution with elastic scalability metered consumption and automation…

How to Architect and Design Cloud-Native Applications

Services.

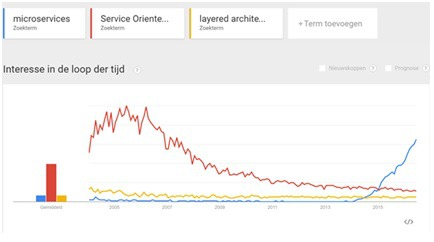

Microservices are smoking hot. The big question in software development is about dependencies: how to break systems down into independent modules so that developing, running, versioning, updating, etcetera become easier. Microservices, as the word already says, make things smaller.

Services follow principles like Products not Projects, Business Functionality, Decentralized Governance, Business Ownership, Own Data, Replaceable, Evolve Independently, Automated, No Coupling.

On Martin Fowler’s website you can find a Microservices Resource Guide. Another good read is Microservices (aka micro applications).

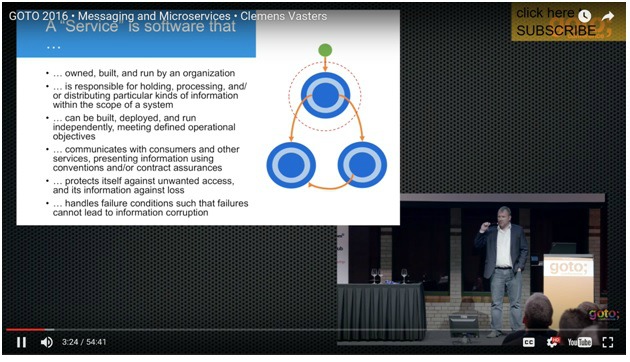

I like this presentation (messaging and microservices) from Clemens Vasters; its insights into how Azure itself is designed with services are valuable.

Pipelines.

Reduced cycle time, early feedback, increased predictability, lower delivery risks and more are the benefits and goals of a good release pipeline. Some general release pipeline principles are:

- High quality by default.
  - Validation and build-breaking activities on every level.

- All versioned.
  - Including infrastructure, packages, configuration and application sources.

- Work in small batches.
  - Executed together with bits.

- No manual activities.
  - Automate repetitive tasks.
  - Automation is immutable.

- Continuous improvement.
  - The pipeline is never done.

- Everyone is/feels responsible.
  - All team members can release to production.
  - For development and for operations.

- Transparency.
  - Transparency in the process.
  - Traceability from idea to running bits.

- Feedback fills the backlog.
  - Feedback on running systems incorporated by default.
  - Operational data collected continuously by the platform.
  - Business usage data continuously gathered by the system.

Implementation and usage practices.

With all these principles in mind (and without losing sight of the goal of business value), let’s start building.

The sample.

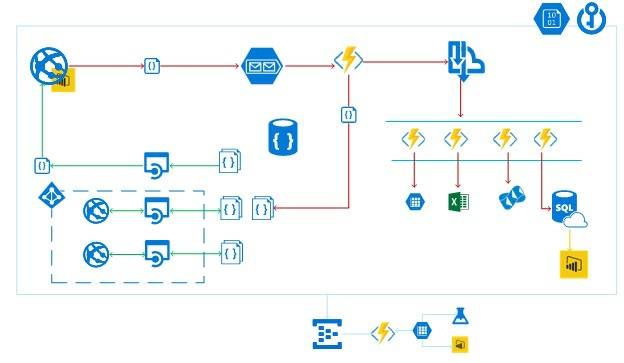

The system I’m using for the explanation is a cloud-native, services-based system built a while ago during a hackathon, see: Building a DevOps Assessment tool with VSTS, Azure PaaS, and OneShare in minutes. It evolved into this.

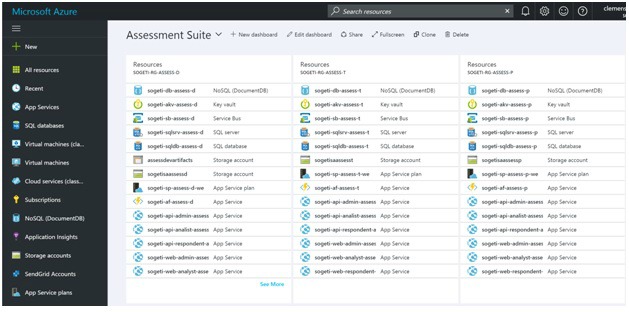

It contains several App Services (Web API and Functions), some backed by AAD, a storage account, DocumentDB with several collections, a message bus topic with subscribers, SendGrid and a SQL database for reporting via Power BI. A small supporting subsystem for monitoring contains an Event Hub. Settings are secured within an Azure Key Vault.

The Structure.

An Azure Enterprise Agreement (EA) is organized in Departments, Subscriptions, and Resource Groups. The differentiation is more or less based on security and costs. Subscriptions fit in a department, which is a kind of cost entity. A Resource Group contains the real functionality: the Azure resources.

Subscriptions.

Azure subscriptions are structured based on business functionality, either a product or a business division. Each subscription should be the responsibility of one organizational unit within the EA. Azure subscriptions can be divided based on access control, billing and/or directory.

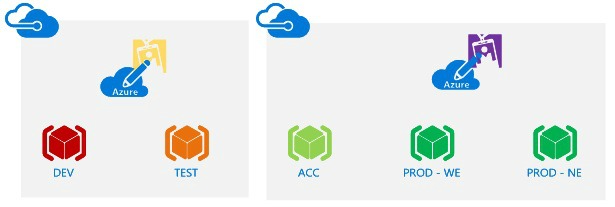

It is a best practice to create separate subscriptions for DEV/TEST and ACC/PROD. This helps separate the costs of the environments and control access rights. It is also common to have the DEV/TEST subscription in a different EA tenant than ACC/PROD.

Note: Azure subscriptions in an EA portal can be created with MSDN benefits. Only MSDN subscribers are allowed to access such a subscription. These users have paid licenses for many Microsoft products, so the subscription costs are lower.

Resource groups.

Azure resource groups are a logical grouping of Azure resources in an Azure subscription. Resource groups can be used to:

- Deploy topologies and their workloads consistently.

- Manage all your resources in an application together using resource groups.

- Apply role-based access control (RBAC) to grant appropriate access to users, groups, and services.

- Use tagging associations to streamline tasks such as billing rollups.

It is a good practice to separate the different environments (DTAP: Development, Test, Acceptance, Production) into different resource groups.

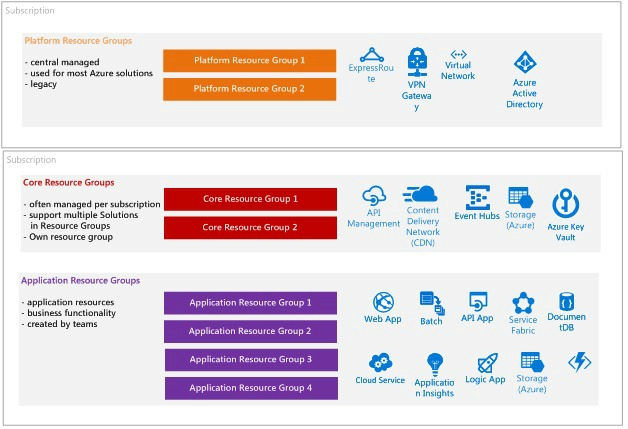

Another separation in resource groups is based on usage and responsibility. Cloud systems do not consist only of application components with a clear business usage. There are also supporting Azure resources. You can compare them with the old on-premises network/infrastructure and middleware components, which are used by multiple applications and need to be in place to run the application components.

Cloud native systems also have these generic resources. The categories are:

- Platform category: resources that support the whole EA tenant or multiple subscriptions. They are more or less part of the platform. These resources are mainly network related, for example ExpressRoute and VPN gateways. You will see this category of Azure resources in IaaS systems.

- Core category: Azure resources that support multiple ‘products’ or multiple services are core components, the middleware. A good example is Azure API Management, and every other integration component. An App Service plan used by multiple Azure Apps (which can be deployed separately) is also a core resource. Be careful with core resources.

- Application category: the business functionality, the services and components, the code: API Apps, Web Apps, etcetera. The application resources are clustered from the view of a service. A service contains business functionality that is as small as possible, yet still provides value as a single standalone instance. Not smaller, not bigger. You can follow these practices for application resources:

- All artifacts in a resource group have the same version.

- Azure Resources in the Application category can be deployed without external dependencies on other Application category resource groups.

- The only dependency an Application category resource can have is on resources in the core and platform resource categories.

- By grouping resources in a resource group based on business functionality, this business value can be delivered decoupled, without having to take care of or wait for other dependencies.

- Application type Resource groups will have a single owner.

- All resources in a resource group will have the same lifecycle.

A system should be divided into these categories, where every category has its own resource group(s), RBAC and cost insights.
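The dependency rule for the Application category can be checked mechanically. The following is a minimal sketch, not the tooling used for the sample system: the `ResourceGroup` type, the `find_violations` function and the resource group names are all hypothetical and only illustrate the rule that an application resource group may depend on core and platform groups, but never on another application group.

```python
from dataclasses import dataclass, field

# The three category names come from the text; everything else is hypothetical.
PLATFORM, CORE, APPLICATION = "platform", "core", "application"


@dataclass
class ResourceGroup:
    name: str
    category: str
    depends_on: list[str] = field(default_factory=list)


def find_violations(groups: list[ResourceGroup]) -> list[str]:
    """List every dependency from one application group on another application group."""
    by_name = {group.name: group for group in groups}
    violations = []
    for group in groups:
        if group.category != APPLICATION:
            continue  # the rule only constrains application resource groups
        for dependency_name in group.depends_on:
            if by_name[dependency_name].category == APPLICATION:
                violations.append(f"{group.name} -> {dependency_name}")
    return violations


# Hypothetical layout: the reporting service wrongly depends on another service.
groups = [
    ResourceGroup("rg-core-dev", CORE),
    ResourceGroup("rg-assessment-api-dev", APPLICATION, ["rg-core-dev"]),
    ResourceGroup("rg-reporting-dev", APPLICATION, ["rg-core-dev", "rg-assessment-api-dev"]),
]
print(find_violations(groups))
```

A check like this could run as part of a build, so that a coupling between two services is noticed before it reaches a release.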

Core components in the sample application are the Azure Key Vault, Storage, DocumentDB, Event Hub, SendGrid and the SQL Server.

The challenge is always who is responsible for the Platform and Core resources. Core resources are a sign of danger: they support multiple services, so they are a single point of failure, a centralized system. See Clemens Vasters’ presentation, somewhere around 9:30.

This structure for Azure subscriptions and resource groups supports the principles of independent systems.

Versioning

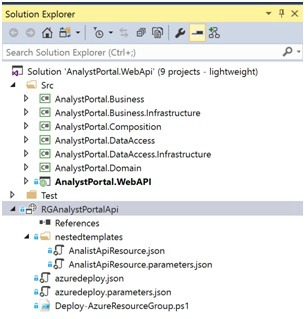

A service, a deployable component which can evolve independently, will have its own Git repository. The Git repository of a service contains everything needed to release the service: the ARM template, the external packages, the configuration and the sources. This is actually the same structure as for resource groups.

Core Resources ARM Templates and create database tables.

API App Application Resource with ARM template and bits.

The different Git repos support the principles of decoupled systems and of keeping everything under version control. It makes releasing the different components individually easy.

Build and Release.

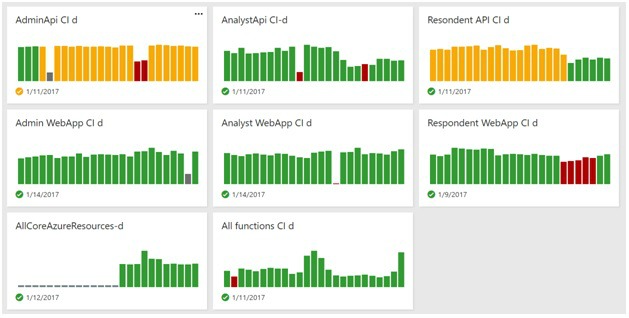

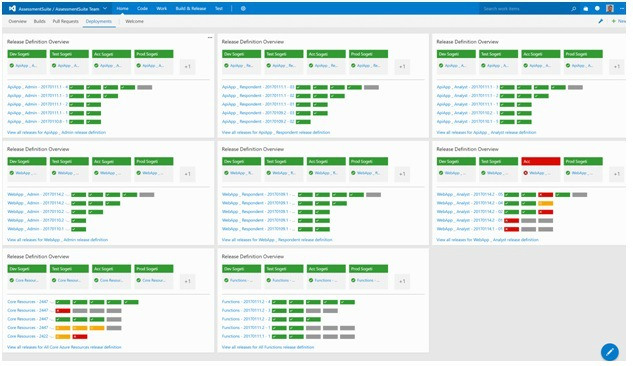

Build and release are fully automated and triggered by a code change, a Git pull request. Every Git repo has its own build and release.

Build.

A build compiles, validates, packages and publishes the artifacts and the ARM templates. Not only the sources are validated; the ARM template is validated too. A PowerShell script build task validates the template against several company guidelines.

This is done for every service/Git repo, which gives a nice overview of the state and quality of all components in our cloud-native system.
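The company guidelines themselves are not listed in this post, and the real check is a PowerShell build task. As an illustration only, here is a minimal Python sketch of what such a template validation could look like. The three rules (no default value on secure parameters, a description on every parameter, no hard-coded resource names) and the sample template are assumptions, not the actual guidelines.

```python
import json


def check_template(template: dict) -> list[str]:
    """Return one finding per violated (hypothetical) guideline."""
    findings = []
    for name, parameter in template.get("parameters", {}).items():
        is_secure = parameter.get("type", "").lower() == "securestring"
        if is_secure and "defaultValue" in parameter:
            findings.append(f"parameter '{name}': secure parameter has a default value")
        if "description" not in parameter.get("metadata", {}):
            findings.append(f"parameter '{name}': missing metadata.description")
    for resource in template.get("resources", []):
        name = resource.get("name", "")
        # ARM template expressions are wrapped in square brackets.
        if not name.startswith("["):
            findings.append(f"resource '{name}': name is hard-coded instead of constructed")
    return findings


# Hypothetical template fragment with three guideline violations.
SAMPLE = json.loads("""
{
  "parameters": {
    "environment": {"type": "string", "metadata": {"description": "DTAP stage"}},
    "sqlPassword": {"type": "securestring", "defaultValue": "P@ssw0rd"}
  },
  "resources": [
    {"type": "Microsoft.Storage/storageAccounts", "name": "mystorage01"},
    {"type": "Microsoft.Web/sites", "name": "[variables('siteName')]"}
  ]
}
""")

findings = check_template(SAMPLE)
for finding in findings:
    print(finding)
```

In a build, a non-empty list of findings would be the signal to break the build.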

There is something wrong with an API.

Release.

A release contains 3 actions:

- provisioning of the Azure resources (ARM template),

- deployment of the bits (for application resources)

- the configuration of the system.

All actions are related, dependent and automated.
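A minimal sketch of how these three actions could be sequenced across resource groups, assuming that shared core resources have to be released before the services that depend on them. The group names and the `release_plan` function are hypothetical; the ordering uses `graphlib.TopologicalSorter` from the Python standard library (Python 3.9 or later).

```python
from graphlib import TopologicalSorter

# Hypothetical resource groups: each key maps to the groups it depends on.
DEPENDS_ON = {
    "core": set(),
    "assessment-api": {"core"},
    "report-function": {"core"},
}
# Only application groups carry deployable bits; core holds infrastructure only.
APPLICATION_GROUPS = {"assessment-api", "report-function"}


def release_plan(depends_on, application_groups):
    """Return (group, action) steps so that every dependency is released first."""
    plan = []
    for group in TopologicalSorter(depends_on).static_order():
        plan.append((group, "provision"))   # ARM template
        if group in application_groups:
            plan.append((group, "deploy"))  # application bits
        plan.append((group, "configure"))   # settings and secrets
    return plan


plan = release_plan(DEPENDS_ON, APPLICATION_GROUPS)
for group, action in plan:
    print(f"{action:<10} {group}")
```

The order between the two services is not fixed, which matches the idea that services are released independently of each other.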

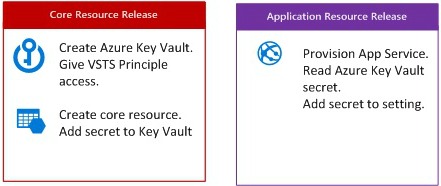

Most services depend on the Core resources, which should be provisioned first. This dependency is most often reflected in connection strings, keys, IDs, etcetera: information which differs per environment and requires secure treatment. This is where the Azure Key Vault comes in.

During the provisioning of the core resources, all secrets which need to be shared across application resources are stored in the Azure Key Vault. Only a principal account which is known by Visual Studio Team Services has access rights to the Key Vault.

An Azure Key Vault is used per environment (DTAP).

Register a secret in the Azure Key Vault.

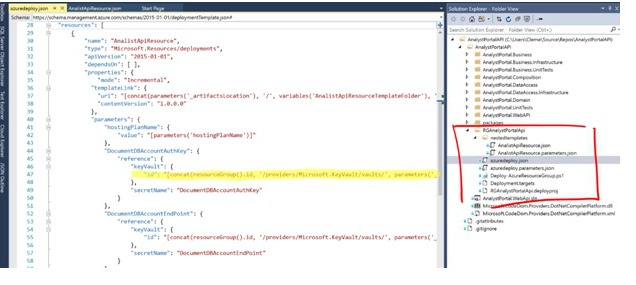

Read a secret from the Azure Key Vault. A nested ARM template is used to make the Azure Key Vault location dynamic for the different DTAP stages.
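To make the idea of a stage-dependent Key Vault location concrete, here is a small Python sketch that builds the Key Vault reference an ARM parameter uses to read a secret. The `reference` / `keyVault.id` / `secretName` shape and the resource ID format are the standard ARM ones; the naming convention, the function names, the secret name and the placeholder subscription ID are assumptions made for illustration.

```python
import json


def key_vault_id(subscription_id: str, customer: str, environment: str) -> str:
    """Build the resource ID of the stage's Key Vault from a naming convention."""
    # Hypothetical convention: one core resource group and one vault per stage.
    resource_group = f"{customer}-core-{environment}"
    vault_name = f"{customer}-kv-{environment}"
    return (
        f"/subscriptions/{subscription_id}"
        f"/resourceGroups/{resource_group}"
        f"/providers/Microsoft.KeyVault/vaults/{vault_name}"
    )


def secret_parameter(vault_id: str, secret_name: str) -> dict:
    """Return an ARM parameter value that points at a Key Vault secret."""
    return {"reference": {"keyVault": {"id": vault_id}, "secretName": secret_name}}


SUBSCRIPTION_ID = "00000000-0000-0000-0000-000000000000"  # placeholder, not a real subscription

for stage in ("dev", "prod"):
    vault = key_vault_id(SUBSCRIPTION_ID, "acme", stage)
    parameters = {"sqlConnectionString": secret_parameter(vault, "SqlConnectionString")}
    print(json.dumps(parameters, indent=2))
```

Only the stage changes between the two outputs, which is the same effect the nested template achieves inside ARM.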

The number of parameters within an ARM template can grow enormously. Using an Azure Key Vault for secrets is a good way to control this. But putting all configuration settings in a Key Vault can get costly and is a bit overdone. To minimize the number of parameters used during deployments, a proper naming convention is needed.

The guideline for ARM templates is that one template and one parameter file are used for all stages. The only variable is the one that indicates which stage the template is deployed to.

The names of the resources are constructed from this single parameter according to the naming conventions. For example:

"[toLower(concat(parameters('customer'),'sa',variables('AppVar'),substring(variables('storage').skuTier, 0, 1),parameters('environment'),'1'))]",
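The expression above concatenates the customer, the literal `sa`, an application variable, the first character of the storage SKU tier, the environment and the literal `1`, and lower-cases the result. A small Python equivalent makes the resulting names easy to see. The function name and the sample values are hypothetical; the length and character check reflects the Azure rule that storage account names are 3 to 24 lowercase letters and digits.

```python
import re


def storage_account_name(customer: str, app_var: str, sku_tier: str, environment: str) -> str:
    """Mirror the ARM expression: toLower(concat(..., substring(skuTier, 0, 1), ...))."""
    name = (customer + "sa" + app_var + sku_tier[0:1] + environment + "1").lower()
    # Storage account names must be 3-24 characters, lowercase letters and digits only.
    if not re.fullmatch(r"[a-z0-9]{3,24}", name):
        raise ValueError(f"invalid storage account name: {name!r}")
    return name


# Only the environment value differs between stages (sample values are made up).
for environment in ("dev", "test", "acc", "prod"):
    print(storage_account_name("Acme", "web", "Standard", environment))
```

Because every name is derived from the same inputs, changing the environment value is enough to get a full, consistently named set of resources for another stage.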

The release for an App Service within Visual Studio Team Services is pretty simple when all this is in place. Three steps:

- Provision the Azure resource group, taking the settings from the parameters and the secrets from the Key Vault for configuration purposes.

- Deploy the Application.

- Validate the deployment.

Cloning the environment in VSTS Release, changing the one environment variable and running the release again results in a completely similar environment.

All this results in a fully automated release of all components, with a least-privilege mindset. All stages have the same environment configuration and are completely decoupled from each other.

Transparency comes out of the box with Visual Studio Team Services. From a release, it is easy to dive into the executed tests, the commits with code changes, the reviewers, the discussions and the underlying product backlog items.

Still something wrong with one service 😉

The only thing left is the feedback loop back from the running system; the Azure Event Hub is used for it. But that is something for another post.

- Feedback fills the backlog.
  - Feedback on running systems incorporated by default.
  - Operational data collected continuously by the platform.
  - Business usage data continuously gathered by the system.

Happy building.
