INCREMENTAL TEST AUTOMATION DEVELOPMENT – SYNERGIES WITH NON-TEST AUTOMATION ENGINEER ACTIVITIES

May 29, 2019

In my previous article, I discussed using Software Development Life Cycles (SDLCs) for the incremental implementation of a test automation solution (TAS). That article also covered the Test Automation Engineer (TAE) qualification offered by the International Software Testing Qualifications Board® (ISTQB®). Test teams are usually interdisciplinary, bringing together several specialists and domain experts, including a test automation engineer. When an SDLC becomes part of the test automation strategy, it should be clear that its success requires the dedication of the whole team: as in any software implementation process, multiple roles need to be filled.

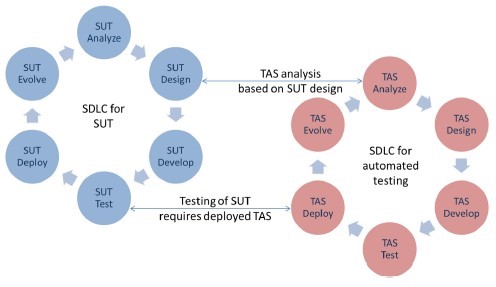

In this article, I assume the basic example of a TAS SDLC (see figure 1) that runs synchronously with the SDLC of a System under Test (SUT). As the TAE syllabus points out (see references), such structures can be much more complex in practice.

Figure 1: Synchronization example of TAS and SUT development processes given by ISTQB® (see references)

In traditional test automation projects, it is common to employ several kinds of specialists with defined responsibilities. The TAE is therefore expected to specify, install, and maintain the TAS, which includes continuously analyzing new software requirements and the development of the SUT to identify necessary improvements or adjustments to the TAS. This raises the question of how other team members can support the TAE in an organized and structured way. Especially in fast-paced environments such as agile software development and deployment, it is important to exploit every possible synergy between development and software quality assurance activities.

Testing the TAS

Implementing an SDLC for a TAS has multiple benefits within a test project. It allows the team to identify and plan tasks according to an estimated velocity for test automation. The activities of both SDLCs shown in figure 1 can also serve as a guide to which activities benefit the development or maintenance of a TAS.

- SUT Design/TAS Analyze: As the figure shows, the arrow between SUT Design and TAS Analyze is bidirectional. Instead of two parallel analyses, one by the TAE and one by the Test Analyst (TA), a single analysis can directly deliver input for both TAS design and test design. The TA and the TAE could even be the same person; from experience, however, it is advisable to have at least the TA deliver all necessary input for both.

- TAS Design: During TAS design, the results of the test analysis produce a set of requirements that the TAS must fulfill. The defined test conditions also provide direct input on which functionality to implement, e.g. which keywords are needed to deliver certain test capabilities. In particular, the test design should identify a smoke test set that validates the TAS across all of its functional capabilities.

- TAS Develop: Sound TAS development is only possible when all needed requirements, stemming not only from the SUT but also from the test design, have been covered during TAS design. Otherwise, bugs or missing capabilities will be introduced into the TAS, which can create an unreasonable amount of waste. The test team has to be aware of this risk and work closely with the TAE to avoid it.

- TAS Test: Testing the TAS can be very tricky. I therefore recommend developing a dedicated test set for the TAS as well. As with any software that needs validation, a tool that will be in use for a longer period requires careful quality assurance. Still, this test set does not need to be large. Ideally, the development version of the TAS runs in parallel to the already deployed TAS, so that results can be cross-checked where possible and validated separately where not. The goal should be to release a new version of the TAS only once it has passed the test stage according to the defined criteria.

- TAS Deploy: Once the acceptance criteria have been met, the TAS can be deployed and go live against the SUT. The test team should check the criteria together with the TAE.

- TAS Evolve: As in any SDLC, open bugs should be tracked in a defect management tool. Identified improvements, features, and tasks that make the TAS better should also be tracked and planned. As in any software project, you could, for example, use a dedicated JIRA board.
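
To make the TAS Design step more tangible, the following is a minimal Python sketch of how the keywords demanded by the test design could be checked by a small smoke test set. It assumes a simple keyword-driven TAS; `KeywordLibrary`, `run_smoke_test`, `untested_keywords` and the shopping-cart keywords are hypothetical illustrations, not the API of any particular tool. The smoke cases come from the test design, so a keyword that is required but not yet implemented shows up as a failure, while an implemented keyword without a smoke case is reported separately.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical smoke case format: keyword name -> (arguments, expected result)
SmokeCases = Dict[str, Tuple[tuple, object]]


class KeywordLibrary:
    """Minimal, hypothetical registry of TAS keywords."""

    def __init__(self) -> None:
        self._keywords: Dict[str, Callable[..., object]] = {}

    def keyword(self, name: str) -> Callable[[Callable[..., object]], Callable[..., object]]:
        """Decorator that registers a function under the given keyword name."""
        def register(func: Callable[..., object]) -> Callable[..., object]:
            self._keywords[name] = func
            return func
        return register

    def __contains__(self, name: str) -> bool:
        return name in self._keywords

    def names(self) -> List[str]:
        return sorted(self._keywords)

    def run(self, name: str, *args: object) -> object:
        return self._keywords[name](*args)


def run_smoke_test(library: KeywordLibrary, cases: SmokeCases) -> Dict[str, bool]:
    """Execute one smoke case per keyword required by the test design."""
    results: Dict[str, bool] = {}
    for name, (args, expected) in cases.items():
        if name not in library:
            results[name] = False  # required by the test design, not implemented yet
            continue
        try:
            results[name] = library.run(name, *args) == expected
        except Exception:
            results[name] = False  # a crashing keyword counts as a failure
    return results


def untested_keywords(library: KeywordLibrary, cases: SmokeCases) -> List[str]:
    """Keywords that exist in the TAS but have no smoke case yet."""
    return [name for name in library.names() if name not in cases]


# Usage example with illustrative keywords
lib = KeywordLibrary()


@lib.keyword("add item to cart")
def add_item(cart: List[str], item: str) -> List[str]:
    return cart + [item]


@lib.keyword("cart total")
def cart_total(prices: List[float]) -> float:
    return sum(prices)


@lib.keyword("empty cart")
def empty_cart() -> List[str]:
    return []


smoke_cases: SmokeCases = {
    "add item to cart": (([], "apple"), ["apple"]),
    "cart total": (([2.0, 3.0],), 5.0),
    "remove item from cart": ((["apple"], "apple"), []),
}
results = run_smoke_test(lib, smoke_cases)
print(results)
print(untested_keywords(lib, smoke_cases))
```

Here the smoke test flags "remove item from cart" as failing because the test design needs it but the TAS does not provide it yet, which is exactly the kind of gap the TAS Develop step warns about.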
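
The parallel run recommended for the TAS Test step, combined with the acceptance check of the TAS Deploy step, could be sketched in Python as follows. This assumes that both the deployed and the development version of the TAS execute the same test cases against the same SUT build and report one verdict per test case. `compare_runs`, `release_allowed` and the 95 % agreement threshold are illustrative assumptions, not criteria defined by ISTQB®.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Set


@dataclass
class RunComparison:
    """Outcome of cross-checking two TAS versions on the same SUT build."""
    agreed: List[str] = field(default_factory=list)    # same verdict in both versions
    diverged: List[str] = field(default_factory=list)  # verdicts differ: investigate both TAS versions
    new_only: List[str] = field(default_factory=list)  # only the candidate ran it: validate separately
    missing: List[str] = field(default_factory=list)   # the candidate lost a test the deployed TAS ran


def compare_runs(deployed: Dict[str, str], candidate: Dict[str, str]) -> RunComparison:
    """Compare per-test verdicts (e.g. 'pass'/'fail') of deployed vs. candidate TAS."""
    comparison = RunComparison()
    for test_id in sorted(deployed.keys() | candidate.keys()):
        if test_id not in deployed:
            comparison.new_only.append(test_id)
        elif test_id not in candidate:
            comparison.missing.append(test_id)
        elif deployed[test_id] == candidate[test_id]:
            comparison.agreed.append(test_id)
        else:
            comparison.diverged.append(test_id)
    return comparison


def release_allowed(comparison: RunComparison, manually_validated: Set[str],
                    min_agreement: float = 0.95) -> bool:
    """Hypothetical acceptance gate for promoting the candidate TAS."""
    checked = len(comparison.agreed) + len(comparison.diverged)
    agreement = len(comparison.agreed) / checked if checked else 0.0
    unvalidated = [t for t in comparison.new_only if t not in manually_validated]
    return agreement >= min_agreement and not unvalidated and not comparison.missing


deployed_run = {"TC-01": "pass", "TC-02": "fail", "TC-03": "pass"}
candidate_run = {"TC-01": "pass", "TC-02": "fail", "TC-03": "pass", "TC-04": "pass"}

result = compare_runs(deployed_run, candidate_run)
print(result.new_only)                                        # ['TC-04']
print(release_allowed(result, manually_validated=set()))      # False
print(release_allowed(result, manually_validated={"TC-04"}))  # True
```

A divergent verdict does not automatically mean the new version is wrong; it may just as well expose a long-standing bug in the deployed TAS, which is why such cases are collected for investigation rather than silently counted against the candidate.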

This, of course, covers only the basics; there are many more complex tasks and processes to take into consideration. We at Sogeti are working on continuous integration and DevOps and are also forerunners in test automation technology and processes.

[References]

Benefits of a Generic Test Automation Architecture, Toni Kraja, accessed on 21/05/2019 at https://labs.sogeti.com/benefits-of-generic-test-automation-architecture/

Manifesto for Agile Software Development, accessed on 30/03/2019 at https://agilemanifesto.org/iso/en/manifesto.html
